
Main article: Ratings system

What's wrong with what we have?

Traditional mass media is biased toward the status quo. It relies on corporate advertising, uses government sources for the bulk of its information, and is very reluctant to break out of traditional left-right boxes. Chomsky accuses it of “manufacturing consent” per the title of one of his books. The consent in this case is for a “Washington consensus” in world affairs and a general bias toward “free” market capitalism. The media, both right and left, is complicit in advancing an ideology that generally favors the upper classes at the expense of workers and the poor. Whether we adopt Chomsky’s specific views or not, most observers readily admit to the biases of traditional media, both their left or right bias and their unwillingness to present anything outside carefully demarcated left-right boundaries.

Social media is highly manipulative because it has discovered that there is profit in polarization. It promotes a model which perpetuates misinformation bubbles. This type of media is less influenced by large institutional sources and more reliant on individual contributors. Its revenue is still derived, however, from advertising of a targeted nature that depends on collecting as much personal information from users as possible. Therefore its algorithms seek to keep users engaged by directing content to them that feeds base emotional desires rather than reasoned thought.

Bubble Prevention

Our system works by individual choice so misinformation bubbles will naturally form, just like they do on social media. So how do we work against that while still preserving choice?

First, our system will not have the perverse incentive that social media has to inflame its audience. If a user chooses a particular algorithm, we will not then actively feed the user content designed to entrap them. This alone should offer a significant degree of protection, and it should also discourage content providers from ginning up the most inflammatory material.

Second, the software’s scoring mechanism will, at least by default, weight highly such criteria as veracity, relevance, freedom from fallacies, clarity, reasonableness, willingness to compromise, and open-mindedness. For practical arguments we might add return on investment, probability, and other objective measures of goodness. Users will be able to change the weights and add whatever criteria they prefer, but it seems unlikely that people will consciously choose to be misinformed and made angry. And if they do, they will be doing so by choice, which should mitigate the negative effects. It is one thing to passively take in content and react angrily and quite another to seek out anger for fun. Once you realize that anger is under your control, its power is reduced.
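The weighting idea can be sketched in a few lines. This is only an illustration, not the system's actual scoring code: the criteria names come from the paragraph above, while the numeric weights, the 0-to-1 rating scale, and the function names `score_content` and `with_user_overrides` are assumptions made for the example.

```python
from typing import Dict

# Hypothetical defaults: criteria names are from the text above,
# the numeric weights are illustrative assumptions.
DEFAULT_WEIGHTS: Dict[str, float] = {
    "veracity": 3.0,
    "relevance": 2.0,
    "freedom_from_fallacies": 2.0,
    "clarity": 1.0,
    "reasonableness": 1.0,
    "open_mindedness": 1.0,
}


def score_content(ratings: Dict[str, float], weights: Dict[str, float]) -> float:
    """Return the weighted average of per-criterion ratings.

    Ratings are assumed to lie in [0, 1]. Criteria that have no rating
    are skipped rather than counted as zero.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for criterion, weight in weights.items():
        if criterion in ratings and weight > 0:
            weighted_sum += weight * ratings[criterion]
            total_weight += weight
    return weighted_sum / total_weight if total_weight else 0.0


def with_user_overrides(
    defaults: Dict[str, float], overrides: Dict[str, float]
) -> Dict[str, float]:
    """Copy the default weights, then apply the user's changes and additions."""
    merged = dict(defaults)
    merged.update(overrides)
    return merged
```

A user who cares more about clarity could call `with_user_overrides(DEFAULT_WEIGHTS, {"clarity": 3.0})` and pass the result to `score_content`; the defaults themselves are never modified.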

Third, since the system will provide, through good UI design, an easy way to change algorithms and settings, users will be able to quickly venture out of any bubble they may have inadvertently created. The UI design will encourage exploration, much like apps that give you live feeds from any radio station in the world, or the news website allsides.com, which provides left, center, and right articles. This won’t prevent anyone from settling in their bubble but, having done so, they will find it easy to break out.

Specifically, the system might offer its users the following:

- Would you like to see what the other side is saying? Provide a “what the other side believes” link.

- How does your opinion stack up against everyone else using this system?

- You rank highly in your community. Do you want to know where you’d rank in this other community?

- A scoring and trust system that can take anything into account, e.g., how far to the right or left you are. We can present tools to help users do this, like a quadrant diagram of political views. Users could then filter out the views outside their numerical criteria.

- A dial or dials that can be easily tuned to new viewpoints/opinions.
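The quadrant filter and the “what the other side believes” link from the list above might look something like the following sketch. Everything here is an assumption for illustration: the two axes, the -1 to +1 scale, the `Viewpoint` type, and the choice to define “the other side” by mirroring the user's ranges through the center of the diagram.

```python
from dataclasses import dataclass
from typing import List, Tuple

Range = Tuple[float, float]


@dataclass(frozen=True)
class Viewpoint:
    author: str
    economic: float  # assumed scale: -1.0 (left) to +1.0 (right)
    social: float    # assumed scale: -1.0 to +1.0 on a second axis


def within_range(view: Viewpoint, economic: Range, social: Range) -> bool:
    """True if the viewpoint falls inside both of the user's chosen ranges."""
    return (economic[0] <= view.economic <= economic[1]
            and social[0] <= view.social <= social[1])


def filter_views(views: List[Viewpoint], economic: Range, social: Range) -> List[Viewpoint]:
    """Keep only the viewpoints inside the user's numerical criteria."""
    return [v for v in views if within_range(v, economic, social)]


def other_side(economic: Range, social: Range) -> Tuple[Range, Range]:
    """Mirror both ranges through the origin of the quadrant diagram."""
    return (-economic[1], -economic[0]), (-social[1], -social[0])
```

Turning a dial would simply mean calling `filter_views` again with new ranges, and the “other side” link would call it with the output of `other_side`.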

Confirmation Bias Prevention

Another pernicious trend on both social and traditional media is gravitating toward stories that confirm (rather than challenge) what you already believe. It is a major factor in the propagation of disinformation. Again, our system should have some answer to that without taking away choice. Several ideas come to mind:

- Slip in the other point of view from time to time.

- Have an option to remind the user that confirmation bias might be taking place. Name the bias so they can start being conscious of it.

- Have an option to “fact check” people who appear to continuously believe something wrong.

- Have educational features to keep people from getting trapped in their own bias.

- Use AI to detect when debates are going off the rails or presenting lies, in effect an AI referee. Users can choose whether to enable it.
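As one possible reading of “slip in the other point of view from time to time”, the sketch below inserts one opposing item after every few regular feed items. The function name `interleave_opposing` and the `every` parameter are hypothetical, and a real system would presumably let the user set the rate or switch the feature off.

```python
from typing import List, Sequence


def interleave_opposing(feed: Sequence[str], opposing: Sequence[str], every: int = 5) -> List[str]:
    """Insert one opposing item after every `every` regular feed items.

    If the opposing items run out, the rest of the feed is left unchanged.
    """
    if every < 1:
        raise ValueError("every must be at least 1")
    result: List[str] = []
    opposing_iter = iter(opposing)
    for index, item in enumerate(feed, start=1):
        result.append(item)
        if index % every == 0:
            extra = next(opposing_iter, None)
            if extra is not None:
                result.append(extra)
    return result
```

A fixed interval is used here only because it is easy to reason about; a randomized or user-tuned schedule would serve the same purpose.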

Other biases

There are many other cognitive biases on social media as well as systemic and algorithmic biases. These include:

- Participation bias, the tendency of the most vocal people on a platform to skew results. Given the statistical tools at our disposal, our system can counter that by correctly weighting small minorities in the presentation of results. Users will have ready access to tools that show when an influential, vocal opinion is held by only a small group.

- Anchoring effect, the tendency to overweight the first thing you learned about a subject. According to an Indiana University study, political biases on Twitter frequently emerge based on the political leanings of the first connections people have on the platform.

- Familiarity bias, the tendency to equate high volume with believability.

- Availability bias, the tendency to believe things which are easiest to remember.

- Authority bias, the tendency to believe an “authority” whether real or fake.

- Groupthink, a desire for cohesiveness which undermines truth and objectivity.

- Halo effect, where positive reviews or interactions affect other quality judgements about someone.
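To make the participation-bias point concrete, here is a small sketch that compares a naive per-post tally with a per-user tally in which each user counts once, however often they post. The function names and the `(user, stance)` input format are assumptions for the example, and this is only one of several ways the weighting could be done.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Post = Tuple[str, str]  # (user id, stance), a hypothetical input format


def stance_share_by_post(posts: List[Post]) -> Dict[str, float]:
    """Share of each stance when every post counts equally."""
    counts: Dict[str, int] = defaultdict(int)
    for _user, stance in posts:
        counts[stance] += 1
    total = sum(counts.values())
    return {s: c / total for s, c in counts.items()} if total else {}


def stance_share_by_user(posts: List[Post]) -> Dict[str, float]:
    """Share of each stance when every user counts equally.

    Each user contributes one unit of weight, split evenly across their posts.
    """
    posts_per_user: Dict[str, int] = defaultdict(int)
    for user, _stance in posts:
        posts_per_user[user] += 1
    weights: Dict[str, float] = defaultdict(float)
    for user, stance in posts:
        weights[stance] += 1.0 / posts_per_user[user]
    users = len(posts_per_user)
    return {s: w / users for s, w in weights.items()} if users else {}
```

If one user posts eight times in favor and two users post once each against, the per-post view shows 80% in favor while the per-user view shows two thirds against. Showing both numbers side by side is one way to reveal that a loud opinion is held by few people.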

The list goes on and many of these overlap with logical fallacies, as we’ve covered in the past.

What can be done about this? The IU team mentioned above developed a tool called Hoaxy to trace the source of a story and identify low-quality sources. Botometer uses machine learning to detect bot accounts. Fakey is a news literacy game where players get points for sharing news from reliable sources and flagging suspicious content. The idea is that people would rather not be deceived if they can avoid it and that, given the tools, they will readily use them.

The presence of a tool by itself will not change anything. The tool has to attract its audience, be easy/fun to use, and provide a quick reward for the effort expended.

Need to make it fun

Our system needs to be fun. Social media, for all its acknowledged flaws (even among devoted users), is fun. Talk radio and talk TV with a favorite host are also fun. And obviously so are shows that mix comedy and political punditry. In Why Americans Hate the Media and How It Matters, Jonathan Ladd writes that the “existence of an independent, powerful, widely respected news media establishment is an historical anomaly”. He is referring, of course, to the media that existed from the mid-20th century through the 1970s, the one we don’t quite have anymore. He goes on to define factors preventing the return to this era: “another structural impediment is market demand for more partisan or entertaining styles of news”. He concludes that “the United States should strive for a balance between a highly trusted, homogeneous media establishment with little viable competition and an extremely fragmented media environment without any widely trusted information sources”.

One sees Ladd’s point but his solution is somewhat unimaginative. One would expect a journalism professor writing in 2012 to understand the media landscape as it was then and largely remains today. One would not expect him to foresee a technological solution that can merge the trusted but centralized form with the untrusted but decentralized form.

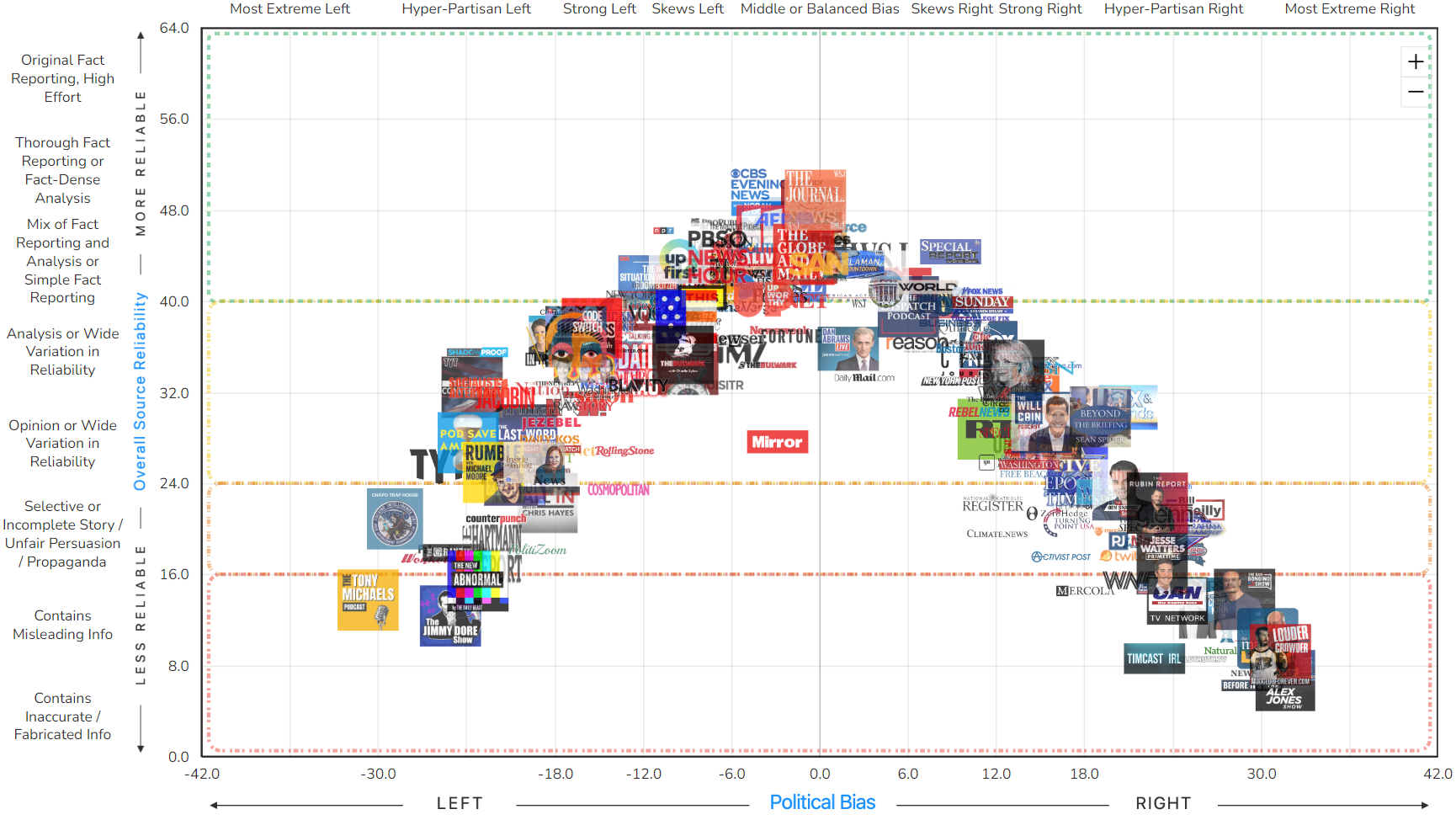

If our system is going to replace media and we need to make it fun, how might that happen? It is notable that media sources considered “middle of the road” or unbiased often have no opinion/editorial content. Here’s a chart that rates bias in major media outlets:

Among the highest ranking, e.g., AP News, Reuters, ABC, there is no opinion content. SAN has some but it is of very poor quality and almost deliberately avoids the most contentious issues, presumably in an effort to maintain its unbiased reputation. It would appear that the best approach is one that acknowledges bias, or openly expresses a point of view, while still airing the other side’s views.

Our rating system will, of course, have opinion content, which is a key feature of any news source precisely because it increases engagement and spurs debate. Most papers with opinion content have adjoining discussion forums where readers can participate, and this idea also fits well with our evolving design concept.

If opinion is more fun than dry news reporting, then how might we combine opinion with gamification to make it even more engaging? One idea is to have sandbox arguments where people try out their ideas to see how they would fare when presented formally. The sandbox wouldn’t count for or against the author in any ratings received, so people would feel free to present in this forum first. These faux ratings could be set up as a competition between players. Debate within the sandbox could then be moved to the main forum if the authors agree.