Bayesian & non-Bayesian approaches to trust and Wang & Vassileva's equation


Main article: Technical overview of the ratings system

How Bayesian Approaches Restrict our Thinking, particularly on Trust

If we adopt a non-Bayesian approach, it opens the door to many possible ways that Probability and Trust can be assigned. If you’d prefer to skip to that, just go to the next section. This section is something of an essay on how and why our thinking becomes more limited in a Bayesian approach.

Bayes restricts us both in a hard technical sense and, more generally, in how we think about the information network. Bayes’ rule needs a prior probability plus the conditional probabilities (likelihoods) of the evidence in order to produce an updated, posterior probability. These probabilities are, presumably, the result of rigorous experimental evidence produced by others (i.e., experts). This isn’t, of course, strictly necessary, but it is the way we usually think about it.

The probabilities can then be modified with trust but, in this context, trust is generally thought of as the competence and honesty of the person performing the test and both are assumed to be relatively high. Trust has less to do with competence in Bayesian approaches (vs averaging approaches) because they only require that the source run a test and report the result correctly. There’s competence in that, to be sure, but it involves little judgement or in-depth knowledge.

Incidentally, in previous posts, competence was modeled as the random part of Trust, and honesty was modeled as Lies and Bias. Implicitly (but not necessarily) left out of competence is the more important issue of the quality of the underlying experimental evidence. This is done because we assume, correctly or not, that such evidence has the backing of experts, has been peer-reviewed, etc. It is, furthermore, difficult for the layperson to independently verify this type of information. We assume, in effect, that the probabilities are right in and of themselves, and that only the respondent can mess them up by reporting them incorrectly (or falsely).

Here’s a simple example. If we have a family gathering and ask everyone to take Covid tests before the event, we usually trust that everyone will competently follow the simple instructions and report correctly whether they saw one line or two. We also trust that they will do this honestly. We don’t question the underlying testing that was done to validate the test. However, if during our get-together we ask everyone what the best economic system or form of government is, we will likely get plenty of debate questioning the reasoning, sources, and methods folks used to arrive at their answers. Questions will be raised, in effect, about the tests people are using to determine their answer. Our Trust methodology changes, as it should, as does our method for combining everyone’s opinion (averaging instead of Bayes).

Furthermore, in a Bayesian approach, because the role of Trust is limited, we tend to limit our view of who assigns it. Indeed, so far, the requestor of information is 100% responsible for assigning Trust to the respondent it is directly querying. If you have had interactions with someone, you can feel fairly confident assigning them a trust level on questions of basic honesty and competence. This might work if all the respondent has to do is report the result of a test. However, if you then engage that person in a discussion about weighty topics, you’ll often have more doubts about how far they can be trusted, not because they are suddenly dishonest but because they may not know a lot about the subject.

You might then find it useful to engage others in determining to what extent the source can be trusted on a particular question.

How Non-Bayesian Trust Approaches Might Work

The weighted average discussion last time brought out the notion that if we are not using Bayes we have a great deal of flexibility in what types of trust information could be provided, and who provides it.

Answers do not necessarily have to be given in terms of probabilities. The source would simply report an answer (True / False) and leave it to the requestor (or others) to evaluate its probability (based on Trust or some component thereof). In a system like this we could simply count up all the True answers and all the False answers and display that. A percentage True/False could be calculated and the higher number would be the “winner”.

An equation to perform this could simply use our current equations (Bayes or averaging) with 100% as the respondent’s probability. In an averaging approach this leads to the same result as simply averaging the answers. We will skip a demonstration of this because it is obvious.
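As a minimal sketch of the counting scheme just described (Python; the function names are hypothetical), each True answer is treated as a 100% probability of True and each False answer as 0%, which shows that a plain average of those probabilities is simply the fraction of True answers:

```python
from collections import Counter


def tally(answers):
    """Return (number True, number False, fraction True) for a list of answers."""
    counts = Counter(answers)
    n_true, n_false = counts[True], counts[False]
    total = n_true + n_false
    fraction_true = n_true / total if total else 0.0
    return n_true, n_false, fraction_true


def average_probability(answers):
    """Average the answers after mapping True -> 1.0 (100%) and False -> 0.0."""
    probs = [1.0 if a else 0.0 for a in answers]
    return sum(probs) / len(probs) if probs else 0.0


answers = [True, True, False, True, False]  # hypothetical responses
print(tally(answers))                # (3, 2, 0.6)
print(average_probability(answers))  # 0.6
```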

A remaining issue is that of assigning Trust. We would still want trust to modify probabilities, even if they start at 100%. For instance, sources could assign a confidence to their own answers. This could be treated mathematically like Sapienza’s trust-modified probability but it differs qualitatively in the sense that now we recognize that the probability is more nuanced than our Bayesian thinking suggested. In particular it recognizes that confidence in an answer is itself a judgement that might benefit from multiple views.

More generally then, Trust, or let’s say Confidence in someone’s knowledge, can be assigned by multiple parties. The source can provide a confidence and we can evaluate that confidence on the basis of our trust. If we think they have an inflated view of their confidence (a common problem) we can adjust it downward with our own view using an averaging technique. We could weight each confidence to favor either party in performing the average.
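A minimal sketch of that adjustment, assuming a simple two-party weighted average (the function name `combined_confidence` and the parameter `w_self` are hypothetical): `w_self` is the weight given to the source’s own stated confidence and `1 - w_self` the weight given to the requestor’s view.

```python
def combined_confidence(self_confidence, requestor_view, w_self=0.5):
    """Weighted average of the source's stated confidence and the requestor's
    own estimate; w_self (between 0 and 1) is the weight given to the source."""
    if not 0.0 <= w_self <= 1.0:
        raise ValueError("w_self must be between 0 and 1")
    return w_self * self_confidence + (1.0 - w_self) * requestor_view


# A source claims 95% confidence; we think 60% is more realistic and weight
# our own view more heavily (hypothetical numbers).
print(combined_confidence(0.95, 0.60, w_self=0.25))
```

Lowering `w_self` favors the requestor’s view; `w_self = 0.5` treats both parties equally.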

Wang and Vassileva's Trust Equation

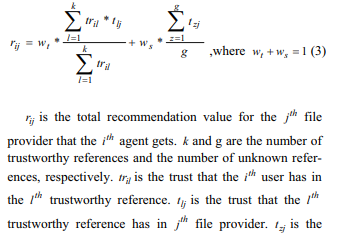

Indeed, we could envision the entire community weighing in on the Trust of the source (i.e., the respondent). A paper by Wang and Vassileva does just this and provides an equation (their Equation 3) for dealing with it.

Here we take Equation 3 and add two terms to it to represent our situation: our source’s trust (confidence) in its own answer and the requesting node’s trust in the source. The paper’s apparent presumptions were that a) the requesting node did not know the source at all a-priori and thus requires help to form its Trust, and b) the source would not provide a valuable assessment of itself because it is biased. Here we relax these two presumptions by noting that we may have an a-priori opinion of a source but still want help to refine it, and that an honest source might indeed provide some insight into its own confidence level. We also refactor the equation slightly by using easier-to-understand variable names.

To understand the equation we first draw a picture of the nodes represented by it.

If we imagine many $k$ and $u$ nodes, the equation is,

$$T'_{rs} = w_k \frac{1}{N_k}\sum_{k=1}^{N_k} T_{rk}\,T_{ks} + w_u \frac{1}{N_u}\sum_{u=1}^{N_u} T_{us} + w_r\,T_{rs} + w_s\,T_{ss}$$

and

$$w_k + w_u + w_r + w_s = 1$$

where

- $w_k$ is the weight placed on the known trustworthy references
- $w_u$ is the weight placed on the unknown references
- $w_r$ is the weight placed on $T_{rs}$ (r’s own a-priori view of s)
- $w_s$ is the weight placed on $T_{ss}$ (the source’s view of itself)
- $T'_{rs}$ is the modified Trust that $r$ has in $s$, after taking into account the other nodes’ opinions
- $T_{rs}$ is the a-priori Trust that $r$ has in $s$
- $T_{rk}$ is the Trust that $r$ has in $k$
- $T_{ks}$ is the Trust that the known reference $k$ has in $s$
- $T_{us}$ is the Trust that the unknown reference $u$ has in $s$
- $T_{ss}$ is the Trust that $s$ has in itself
- $r$ is the node requesting information
- $s$ is the source of the answer (the respondent)
- $N_k$ is the number of known references
- $N_u$ is the number of unknown references
- $k$ is a known reference (known to $r$)
- $u$ is an unknown reference

We first note that this slightly modified equation becomes equivalent to Wang and Vassileva’s Eqn. 3 when $w_r = 0$ and $w_s = 0$. We also note that the first term, in particular, has the property that when $T_{rk} = 0$, the corresponding $T_{ks}$ simply ceases to contribute.
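A minimal Python sketch of the modified equation follows. It assumes the known-reference term is the sum of $T_{rk}T_{ks}$ divided by the number of known references $N_k$, the unknown-reference term is the plain average of $T_{us}$, and the four weights sum to 1; the function name `modified_trust` is hypothetical.

```python
from typing import Sequence


def modified_trust(
    T_rk: Sequence[float],  # r's trust in each known reference k
    T_ks: Sequence[float],  # each known reference's trust in s
    T_us: Sequence[float],  # each unknown reference's trust in s
    T_rs: float,            # r's a-priori trust in s
    T_ss: float,            # s's trust in itself
    w_k: float,
    w_u: float,
    w_r: float,
    w_s: float,
) -> float:
    """Return T'_rs, r's modified trust in s (hypothetical sketch)."""
    if len(T_rk) != len(T_ks):
        raise ValueError("T_rk and T_ks need one entry per known reference")
    if abs(w_k + w_u + w_r + w_s - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    # Known references: each opinion T_ks is scaled by r's trust in that
    # reference, so a reference with T_rk = 0 adds nothing (but still counts in N_k).
    known = sum(a * b for a, b in zip(T_rk, T_ks)) / len(T_ks) if T_ks else 0.0
    # Unknown references: plain average of their opinions of s.
    unknown = sum(T_us) / len(T_us) if T_us else 0.0
    return w_k * known + w_u * unknown + w_r * T_rs + w_s * T_ss


# Two-term form (w_r = w_s = 0): one known and one unknown reference.
print(modified_trust([1.0], [0.5], [0.7], T_rs=0.0, T_ss=0.0,
                     w_k=0.5, w_u=0.5, w_r=0.0, w_s=0.0))
```

Setting `w_r = w_s = 0` gives the two-term form corresponding to Wang and Vassileva’s Eqn. 3. An empty group contributes 0 in this sketch, so its weight should also be set to 0.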

Indeed this equation is similar in this respect to the trust-weighted averaging scheme we saw previously. Although the paper’s approach is Bayesian (presumably because they have hard performance data on file providers), we can adopt this equation for non-Bayesian purposes.

In this scheme we are effectively creating another trust network to ask the question: how much trust do we have in Node s? This information will then be used to modify the probability that Node s responds with when asked a real question by Node r.

We are, furthermore, using nodes that are unknown to us, ones we generally don’t ask questions of. This is a reasonable approach, especially for distant nodes for which we may have no known references.

The authors use a weighting to distinguish known and unknown references. Presumably the weighting is higher for known nodes. But it seems we could arbitrarily define further groups of nodes to subdivide either category. We could group together known nodes that are particularly good at rating others, or form a group of “semi-known” nodes that rank in the middle. The extension of the equation to either of these cases is trivial. To some extent we have already done this by adding r’s own a-priori view ($T_{rs}$) and s’s view of itself ($T_{ss}$) as contributors to the overall Trust level.
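The grouping extension can be sketched by treating every category as a weighted group of (trust in the reference, reference’s trust in s) pairs. The `Group` class and `grouped_trust` function are hypothetical names, and the sketch assumes each group’s term is a trust-discounted average over its members, mirroring the known-reference term, with a discount of 1.0 for references we have no opinion of. With four groups (known, unknown, r alone, s alone) it reduces to the four-term equation.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Group:
    """A hypothetical group of references sharing one weight."""
    weight: float
    # Pairs of (r's trust in the reference, the reference's trust in s).
    # Use 1.0 as the first element for references r has no opinion of.
    opinions: List[Tuple[float, float]] = field(default_factory=list)


def grouped_trust(groups: List[Group]) -> float:
    """Weighted sum of each group's trust-discounted average opinion of s."""
    if abs(sum(g.weight for g in groups) - 1.0) > 1e-9:
        raise ValueError("group weights must sum to 1")
    total = 0.0
    for g in groups:
        if g.opinions:  # an empty group contributes nothing
            avg = sum(t_ref * t_s for t_ref, t_s in g.opinions) / len(g.opinions)
            total += g.weight * avg
    return total


# Known, "semi-known", unknown, r's own view and s's self-view as five groups
# (all numbers hypothetical).
groups = [
    Group(0.3, [(0.9, 0.6), (0.9, 0.8)]),   # known references
    Group(0.2, [(0.6, 0.7), (0.6, 0.9)]),   # semi-known references
    Group(0.2, [(1.0, 0.85), (1.0, 0.9)]),  # unknown references
    Group(0.2, [(1.0, 0.7)]),               # r's a-priori view, T_rs
    Group(0.1, [(1.0, 0.95)]),              # s's view of itself, T_ss
]
print(grouped_trust(groups))
```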

Such a scheme implies a great deal of information from various nodes. This information could be presented as trust distributions using a binning technique (i.e., histograms). This would clearly be valuable in and of itself, but it might also provide insight into groupings that could be weighted differently. A grouping of overly biased supporters, for instance, might easily be identified given a distribution of this kind.
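As a minimal sketch of the binning idea (the function names `bin_trust` and `print_histogram` are hypothetical), the references’ trust values can be counted into equal-width bins on [0, 1] and printed as a text histogram. The sample values are the known and unknown references’ trust in the source used in the numerical example below.

```python
def bin_trust(values, n_bins=5):
    """Count trust values (assumed to lie in [0, 1]) into n_bins equal-width bins."""
    counts = [0] * n_bins
    for v in values:
        if not 0.0 <= v <= 1.0:
            raise ValueError(f"trust value {v} outside [0, 1]")
        # min() puts v == 1.0 into the last bin instead of overflowing
        idx = min(int(v * n_bins), n_bins - 1)
        counts[idx] += 1
    return counts


def print_histogram(counts):
    """Print one row per bin: the bin range followed by a bar of '#' marks."""
    n_bins = len(counts)
    for i, c in enumerate(counts):
        lo, hi = i / n_bins, (i + 1) / n_bins
        print(f"{lo:.1f}-{hi:.1f} | {'#' * c}")


trust_values = [0.5, 0.6, 0.7, 0.8, 0.9,                           # known references
                0.8, 0.82, 0.84, 0.86, 0.88, 0.9, 0.92, 0.94, 0.96, 0.98]  # unknown
print_histogram(bin_trust(trust_values))
```

A tight cluster in one bin that sits well apart from the rest is the kind of pattern that might flag a biased group.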

Numerical Example

Let’s suppose we have 5 known nodes and 10 unknown nodes, as follows.

The requestor node’s Trust for its Known nodes is high:

$$T_{rk} = [0.9,\ 0.9,\ 0.9,\ 0.9,\ 0.9]$$

But the Trust of the Known nodes for the Source node is mixed, and lower:

$$T_{ks} = [0.5,\ 0.6,\ 0.7,\ 0.8,\ 0.9]$$

The Trust of the unknown nodes for the Source node, however, is higher and more consistent:

$$T_{us} = [0.8,\ 0.82,\ 0.84,\ 0.86,\ 0.88,\ 0.9,\ 0.92,\ 0.94,\ 0.96,\ 0.98]$$

The requestor node’s own trust in its source node is:

And the source’s trust in itself is:

With a weighting distribution which emphasizes the contribution of the known nodes,

we obtain, after plugging into the equation above:

It is possible, however, for us to suspect that the known nodes are biased against the source and that perhaps the unknown nodes have a more objective opinion. In this case, we might change the weighting to reflect that, with a consequent increase in our overall Trust:

This snippet reproduces these calculations.

Addendum: Mediating Tss

The analysis above does not mediate the $T_{ss}$ opinion except through the weighting factor $w_s$ applied to it. To temper this value, which is likely to be larger than is realistic (people often have an inflated opinion of themselves), we might use a network similar to the one just described to find $T^{*}_{ss}$. In effect, we are asking the question: how much should we trust s’s confidence in itself, $T_{ss}$? We can use the same network for this without including the $T_{ss}$ term, i.e., by simply setting $w_s = 0$. The same equation as above then applies.

Once we have computed the answer, we can multiply it by $T_{ss}$ to produce an improved value of $T_{ss}$, that is, $T'_{ss} = T^{*}_{ss}\,T_{ss}$. The calculation above then proceeds with $T'_{ss}$ to produce the desired $T'_{rs}$, a trust value which will be used to find the probability for the real question at hand.

To break this down, the calculation would proceed as follows:

- Ask the network “how much trust should we have in $T_{ss}$, s’s confidence in itself?” Set $w_s = 0$ and calculate $T^{*}_{ss}$ as described by the equation above.

- Calculate $T'_{ss} = T^{*}_{ss}\,T_{ss}$.

- Ask the network the “real question” and calculate $T'_{rs}$ as described by the equation above, using $T'_{ss}$ instead of $T_{ss}$.

- Use $T'_{rs}$ as you normally would, to modify the probability that the source is giving you (using Bayes or an averaging technique).
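The following is a sketch, not the article’s original snippet. It uses the $T_{rk}$, $T_{ks}$ and $T_{us}$ values listed above, but $T_{rs}$, $T_{ss}$, both weighting distributions, and all of the step 1 opinions in the addendum are **hypothetical placeholders**, so the printed numbers are illustrative only. It assumes the same four-term form as before (known term normalized by the number of known references) and, for step 1, assumes r’s trust in the known references is unchanged.

```python
# Values that appear in the numerical example.
T_rk = [0.9] * 5
T_ks = [0.5, 0.6, 0.7, 0.8, 0.9]
T_us = [0.8, 0.82, 0.84, 0.86, 0.88, 0.9, 0.92, 0.94, 0.96, 0.98]

# HYPOTHETICAL placeholders: not the article's values.
T_rs = 0.7
T_ss = 0.95
favor_known = dict(w_k=0.5, w_u=0.2, w_r=0.2, w_s=0.1)
favor_unknown = dict(w_k=0.2, w_u=0.5, w_r=0.2, w_s=0.1)


def modified_trust(T_rk, T_ks, T_us, T_rs, T_ss, w_k, w_u, w_r, w_s):
    """T'_rs from the four-term equation (known term normalized by N_k)."""
    if abs(w_k + w_u + w_r + w_s - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    known = sum(a * b for a, b in zip(T_rk, T_ks)) / len(T_ks)
    unknown = sum(T_us) / len(T_us)
    return w_k * known + w_u * unknown + w_r * T_rs + w_s * T_ss


# Numerical example: shifting weight from known to unknown nodes raises
# the trust here, because the unknown nodes rate s more highly.
t_known = modified_trust(T_rk, T_ks, T_us, T_rs, T_ss, **favor_known)
t_unknown = modified_trust(T_rk, T_ks, T_us, T_rs, T_ss, **favor_unknown)
print(f"favor known: {t_known:.3f}, favor unknown: {t_unknown:.3f}")

# Addendum, step 1 (HYPOTHETICAL opinions): the same references rate how far
# s's self-assessment T_ss can be trusted; w_s = 0 leaves T_ss out.
T_ks_conf = [0.6, 0.6, 0.7, 0.7, 0.8]
T_us_conf = [0.7] * 10
T_rs_conf = 0.6
T_star_ss = modified_trust(T_rk, T_ks_conf, T_us_conf, T_rs_conf, T_ss,
                           w_k=0.5, w_u=0.3, w_r=0.2, w_s=0.0)
# Step 2: temper the self-assessment.
T_ss_prime = T_star_ss * T_ss
# Step 3: rerun the real question with T'_ss in place of T_ss.
T_rs_prime = modified_trust(T_rk, T_ks, T_us, T_rs, T_ss_prime, **favor_known)
# Step 4 (not shown): use T_rs_prime to modify the probability s reports.
print(f"T*_ss = {T_star_ss:.3f}, T'_ss = {T_ss_prime:.3f}, T'_rs = {T_rs_prime:.3f}")
```

Because $T^{*}_{ss} \le 1$ whenever all inputs lie in [0, 1] and the weights sum to 1, step 2 can only lower the source’s self-assessment, which is the intended tempering effect.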