Main article: Technical overview of the ratings system

Setting up the sandbox

A new Linux VM was created to better support new algorithm code development (custom_algo.py / algorithms.py) on the PeerVerity sandbox. Some setup details follow.

- VMWare Workstation 17 Player on MS Windows 11 Pro

- Ubuntu Linux 22.04 (64-bit) configured with 12 GB RAM, 60 GB disk, and 8 CPUs (increased from 2 because of a problem installing Poetry; see below)

- Default setup of Ubuntu OS

- Using preinstalled system Python (3.10.6)

- Installed PyCharm Community Edition (offered by the OS on startup along with other software; just click on PyCharm)

- Installed npm (version 8.5.1), following https://itslinuxfoss.com/install-npm-ubuntu-22-04/:

$ sudo apt install npm -y

- Installed Poetry (version 1.6.1), following https://python-poetry.org/docs/:

$ sudo snap install curl

$ curl -sSL https://install.python-poetry.org | python3 -

Added export PATH="/home/petermenegay/.local/bin:$PATH" to /home/petermenegay/.profile.

$ source .profile (to apply the new .profile changes)

- Git is installed by default, but we need to set up an SSH key so that our GitLab repository recognizes us:

$ ssh-keygen

Press Enter at each prompt (file and passphrase) to accept the default file and an empty passphrase. This generates a public/private key pair; the public key is at /home/petermenegay/.ssh/id_rsa.pub

- Copy the contents of id_rsa.pub and paste them into the SSH key section of our GitLab account

Now, on the Linux VM, in the home directory:

$ mkdir PeerVerity

$ cd PeerVerity

Then follow these instructions:

$ git clone git@gitlab.syncad.com:peerverity/sandbox.git

$ pushd sandbox/client

$ npm ci

$ popd

$ pushd sandbox/server

$ poetry install

This last step will hang if the VM uses the default 2 CPUs. Give it the same number of CPUs as the host computer (8) and it works fine.

Place a working custom_algo.py file in the data folder and then, starting from the PeerVerity directory, do the following:

$ cd sandbox/server

$ ./run_server.sh 8108

NOTE: The instructions mention a run_server.py, which has apparently been replaced by run_server.sh.

One more thing: after pulling the latest code from the repository, you have to rebuild the client. Starting in the sandbox directory:

$ git pull

$ cd client

$ npm ci (in case a new library was added)

$ npm run build

Now the client will contain all the latest changes.

Also, after pulling, go to the server directory and run

$ poetry install

in case any Python dependencies were added.

Open a web browser (Firefox) and go to 0.0.0.0:8108. You should see a big empty circle and some instructions for using the sandbox.

Using the sandbox

The sandbox is still under development and can be a little tricky to use at first. With some guidance, the following tutorial was put together to establish the basic flow. Here we create two opinion nodes, each with a 60% probability (a 60/40 distribution), and combine them at a third node via Bayes with 100% trust. We expect this combination to yield about 69%.
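The 69% figure follows from Bayes' rule for two choices. As a worked check, assume a uniform prior and assume that trust 1.0 leaves each opinion unchanged (p_2 = p_3 = 0.6 for the first choice):

```latex
P = \frac{p_2\,p_3}{p_2\,p_3 + (1 - p_2)(1 - p_3)}
  = \frac{0.6 \times 0.6}{0.6 \times 0.6 + 0.4 \times 0.4}
  = \frac{0.36}{0.52} \approx 0.692
```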

- $ ./run_server.sh 8108

- Open a browser and go to 0.0.0.0:8108

- If the constellation isn’t blank, start a new one by clicking in the gray box in the upper left corner and entering a name you haven’t used before, e.g. junk11.

- Right-click inside the big orange circle to create a new node (Node 1). Left-click it to select it; it turns yellow.

- Middle-click somewhere else to create a second node (Node 2) connected to the first.

- Left-click in empty space to deselect everything (back to neutral).

- Left-click Node 1 to select it again.

- Middle-click somewhere else to create a third node (Node 3) connected to the first.

- Click Node 2 to select it (it turns yellow) and press E to edit it. A small blank graph area will pop up.

- Click the left edge of the graph at about 60%, then click again in the middle at the same 60%. This fills in the first half of the graph. Click in the middle again, now at 40%, and then click the right edge at 40%. You should now have a 60/40 graph. Right-click it so that it “takes”.

- Make the same graph for Node 3.

- Left-click Node 1 to select it (it turns yellow). Right-click Node 2 and press T to set the trust for this relationship to 1.0.

- Repeat for Node 3.

- Click Node 1 to select it.

- Press C to run an inquiry. Two small red rectangles should flow out from Node 1 to Nodes 2 and 3. Then two small green rectangles should flow from Nodes 2 and 3 back to Node 1.

- The resulting graph will appear in the lower left.

- Right-click in the graph to scale it so you can see it. It should look approximately like a 69/31 distribution.
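Below is a minimal Python sketch of why the two drawn 60/40 step graphs should combine to roughly 69/31. It makes two assumptions:

- the sandbox's Bayes combination multiplies the binned distributions bin by bin and then renormalizes;
- the distributions use the 100 bins mentioned in the interface ideas below.

make_step_pdf, bayes_combine and mass_below are hypothetical helpers, not sandbox code.

```python
def make_step_pdf(n_bins: int, left: float, right: float) -> list[float]:
    """Binned step distribution: weight `left` in each bin of the first half and
    `right` in each bin of the second half, normalized so the bins sum to 1.
    Mimics the 60/40 graph drawn in the sandbox editor (hypothetical helper)."""
    half = n_bins // 2
    raw = [left] * half + [right] * (n_bins - half)
    total = sum(raw)
    return [v / total for v in raw]


def bayes_combine(*dists: list[float]) -> list[float]:
    """Multiply binned distributions bin by bin and renormalize
    (Bayes with a uniform prior; opinions used as-is, i.e. trust 1.0)."""
    n = len(dists[0])
    product = [1.0] * n
    for d in dists:
        if len(d) != n:
            raise ValueError("all distributions must have the same number of bins")
        product = [acc * v for acc, v in zip(product, d)]
    total = sum(product)
    return [v / total for v in product]


def mass_below(dist: list[float], fraction: float = 0.5) -> float:
    """Probability mass in the first `fraction` of the bins."""
    return sum(dist[: int(len(dist) * fraction)])


if __name__ == "__main__":
    node2 = make_step_pdf(100, 0.6, 0.4)
    node3 = make_step_pdf(100, 0.6, 0.4)
    combined = bayes_combine(node2, node3)
    print(round(mass_below(combined), 4))  # 0.6923, i.e. a 69/31 split
```

With equal-width bins, the binned result reduces to the two-choice value 0.36 / 0.52.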

Some preliminary ideas for improving the user interface:

- When you hover over a node and results are available, show them in the popup below the node.

- Label the axes of the graphs.

- Allow user to pin the graph to keep it in place.

- Anchor the help guide to the right or left side.

- It should be possible to delete selected items with the Delete key. There should also be a way to delete the whole tree and start over. Apparently this is currently done by entering a new constellation name (click in the gray box in the upper left corner).

- It should be possible to type numbers to set the opinions, since drawing the graph precisely is hard.

- Allow for an arbitrary number of bins. Currently the number of bins is set to 100.

Notes on the Interface

Some observations on the sandbox interface:

- Delete key works well.

- When I am focused on an inquiry (by middle-clicking the chart) and then hover over a peer, I see a chart. I think it may not be scaled, and I don’t see a way to scale it.

- When charts are displayed, they are drawn on top of Help and obscure it. Help should be on top of everything.

- The L key (to list the selected peer’s open inquiries) doesn’t seem to do anything.

- It is not clear what the I key does.

Coding the algorithms

We reviewed some of the algorithm code (in custom_algo.py). Some recommendations:

- Include more checks, for example that input and output probabilities sum to 1.

- Round the results consistently (prevent things like 1.0000000000000001)

- Follow Python variable naming conventions (PEP 8), i.e. lowercase names within functions

- Use NumPy array math consistently to speed up the algorithms once we know they work correctly.

- Check that the intermediate_results algorithms work when nodes with children and nodes without children are mixed

- Use None instead of an empty list

Some of these changes have been implemented in the function straight_average_intermediate_privacy_multitrust_random_lying_bias_parent_child.

This includes a bugfix so it correctly handles mixed parent/child relationships at each level (see PM_083023). This means that any level can have both leaf nodes and regular nodes with children.

It also includes two functions to check input and output (PM_090123):

- checkinput checks that the input probabilities add up to exactly 1.
- round_and_check_output rounds the output and checks that the output probabilities add up to exactly 1.

These checks are placed at the beginning of the function and at the end, just before the output AlgorithmOutput object is created. Checks are also made within the algorithm, although rounding inside mathematical algorithms is not good practice.
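Below is a hedged sketch of what checks like checkinput and round_and_check_output might look like. check_input and round_and_check are hypothetical stand-ins, not the functions in custom_algo.py. Floating-point sums of rounded values rarely equal 1 exactly, so the sketch does two things:

- it compares the sum against 1 with a tolerance;
- it moves any rounding residue into the largest entry.

```python
import math


def check_input(probs: list[float], tol: float = 1e-9) -> None:
    """Raise ValueError unless probs are non-negative and sum to 1 within tol."""
    if any(p < 0 for p in probs):
        raise ValueError(f"negative probability in {probs}")
    total = math.fsum(probs)  # correctly rounded sum, avoids accumulated error
    if not math.isclose(total, 1.0, rel_tol=0.0, abs_tol=tol):
        raise ValueError(f"probabilities sum to {total}, not 1")


def round_and_check(probs: list[float], ndigits: int = 6) -> list[float]:
    """Round each probability to ndigits, move the rounding residue into the
    largest entry so the rounded values still sum to 1, then re-check."""
    check_input(probs)
    rounded = [round(p, ndigits) for p in probs]
    residue = round(1.0 - math.fsum(rounded), ndigits)
    i_max = max(range(len(rounded)), key=lambda i: rounded[i])
    rounded[i_max] = round(rounded[i_max] + residue, ndigits)
    check_input(rounded, tol=0.5 * 10.0 ** -ndigits)
    return rounded


if __name__ == "__main__":
    print(round_and_check([1 / 3, 1 / 3, 1 / 3]))  # [0.333334, 0.333333, 0.333333]
```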

Trust modification for continuous algorithms using complex trust factors

For continuous algorithms, the traditional Sapienza modification for trust can be applied to the probability density function just as it would be to individual discrete probabilities (as we have been doing). However, if we use the enhanced trust model with random lying, biased lying, and bias, we need to be more careful in how we proceed.
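Below is a minimal sketch of applying the traditional adjustment to a pdf. It assumes the Sapienza modification has the form p' = T*p + (1 - T)/n for n choices; that formula is not stated on this page. Applied pointwise to a pdf on [0, 1], the uniform density 1 takes the place of 1/n, and the modified pdf still integrates to 1. All function names are hypothetical.

```python
def sapienza_discrete(probs: list[float], trust: float) -> list[float]:
    """Assumed Sapienza form: pull each probability toward 1/n by (1 - trust)."""
    n = len(probs)
    return [trust * p + (1.0 - trust) / n for p in probs]


def sapienza_pdf(pdf_values: list[float], trust: float,
                 x_min: float = 0.0, x_max: float = 1.0) -> list[float]:
    """Same adjustment applied pointwise to a pdf sampled on [x_min, x_max];
    the uniform density 1 / (x_max - x_min) plays the role of 1/n."""
    uniform = 1.0 / (x_max - x_min)
    return [trust * f + (1.0 - trust) * uniform for f in pdf_values]


def trapezoid(xs: list[float], ys: list[float]) -> float:
    """Trapezoidal integral of ys sampled at xs."""
    return sum((xs[i + 1] - xs[i]) * (ys[i] + ys[i + 1]) / 2
               for i in range(len(xs) - 1))


if __name__ == "__main__":
    xs = [i / 10 for i in range(11)]
    pdf = [2 * x for x in xs]                     # linear pdf f(x) = 2x on [0, 1]
    modified = sapienza_pdf(pdf, trust=0.5)
    print(round(trapezoid(xs, modified), 6))      # 1.0: normalization preserved
    print(sapienza_discrete([0.6, 0.4], 0.5))     # [0.55, 0.45]
```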

This is because the enhanced equation distinguishes between the available choices and yields one equation per choice. For two choices (e.g. red, blue) there are two slightly different modification equations. Thus each continuous probability distribution must be split into two sections, with the appropriate equation applied to each.

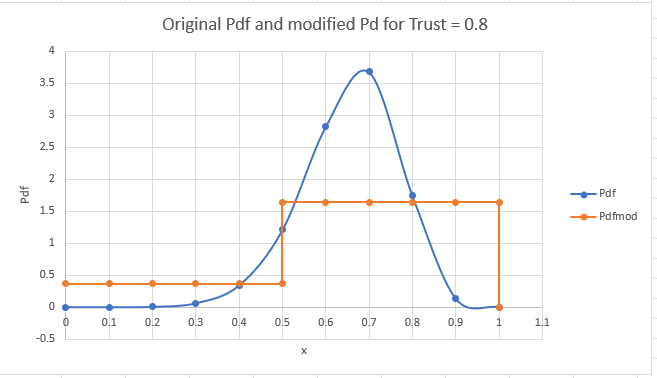

To describe the algorithm we consider an example given by an original Weibull distribution represented with 10 points. There are two choices, so the graph is divided into two sections divided at x=0.5:

The original distribution is shown in blue and the modified distribution in orange. The algorithm is implemented in the function calc_pdfmod_from_complex_trust, which is called from the main registered algorithm bayes_ave_tave_points_continuous_complex_trust. It follows five basic steps:

1. Use the trust_factor in data to establish the number of choices, and divide the x-axis into that many equal-width choices (aka bins). In this case the x-axis choices are delimited by [0.0, 0.5, 1.0]. The function get_x_choices_fromT performs this step.

2. For each choice, find the probability by integrating the given probability distribution between the x-axis limits found in step 1. The function calc_p_choices does this.

3. For each probability found in step 2, find the modified probability using the function pmod_random_lying_bias_continuous_trust_intermediate. This function implements the ideas in the post on Modification to the Sapienza probability adjustment to include random lying, bias, and biased lying.

4. For each modified probability calculated in step 3, find the probability density, Pdf, by dividing the probability by the x-width of the choice. This is the width of one of the x_choices found in step 1, which in this case is 0.5. calc_pdfmod_choices performs this step.

5. Use this result to find the Pdf associated with each x point in the original distribution. To do so, we assign each point the Pdf of the choice whose x limits contain its x value. calc_pdfmod_list performs this step.
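The five steps can be sketched in Python as follows. This is a hypothetical reimplementation for illustration, not the code in calc_pdfmod_from_complex_trust. It differs from the description above in three ways:

- the number of choices is passed in directly instead of being derived from trust_factor;
- integration is trapezoidal over the linearly interpolated points;
- step 3 takes a caller-supplied modification function, because the random lying/bias equations are not reproduced here.

The usage example plugs in a Sapienza-style placeholder and uses a linear pdf as a stand-in for the Weibull example.

```python
from bisect import bisect_right
from typing import Callable


def interp(xs: list[float], ys: list[float], x: float) -> float:
    """Linearly interpolate the sampled pdf (xs ascending) at x."""
    j = min(max(bisect_right(xs, x) - 1, 0), len(xs) - 2)
    t = (x - xs[j]) / (xs[j + 1] - xs[j])
    return ys[j] + t * (ys[j + 1] - ys[j])


def integrate(xs: list[float], ys: list[float], a: float, b: float) -> float:
    """Trapezoidal integral of the piecewise-linear pdf from a to b."""
    pts = [a] + [x for x in xs if a < x < b] + [b]
    vals = [interp(xs, ys, x) for x in pts]
    return sum((pts[i + 1] - pts[i]) * (vals[i] + vals[i + 1]) / 2
               for i in range(len(pts) - 1))


def x_choice_edges(n_choices: int, x_min: float = 0.0, x_max: float = 1.0) -> list[float]:
    """Step 1 (cf. get_x_choices_fromT): equal-width choice boundaries."""
    width = (x_max - x_min) / n_choices
    return [x_min + i * width for i in range(n_choices + 1)]


def binned_pdfmod(xs: list[float], pdf: list[float], n_choices: int,
                  modify: Callable[[list[float]], list[float]]) -> list[float]:
    edges = x_choice_edges(n_choices, xs[0], xs[-1])
    # Step 2 (cf. calc_p_choices): probability of each choice.
    p = [integrate(xs, pdf, edges[i], edges[i + 1]) for i in range(n_choices)]
    # Step 3 (cf. pmod_random_lying_bias_continuous_trust_intermediate): modify.
    pmod = modify(p)
    # Step 4 (cf. calc_pdfmod_choices): probability / width -> flat density per choice.
    pdf_choices = [pm / (edges[i + 1] - edges[i]) for i, pm in enumerate(pmod)]
    # Step 5 (cf. calc_pdfmod_list): each x takes the density of its choice;
    # a point on an inner edge goes to the upper choice, x_max to the last one.
    return [pdf_choices[min(bisect_right(edges, x) - 1, n_choices - 1)] for x in xs]


if __name__ == "__main__":
    xs = [i / 10 for i in range(11)]   # sample points on [0, 1]
    pdf = [2 * x for x in xs]          # linear stand-in for the Weibull example
    trust = 0.5

    def placeholder_modify(p: list[float]) -> list[float]:
        # Sapienza-style placeholder, NOT the random lying/bias equations.
        return [trust * pi + (1 - trust) / len(p) for pi in p]

    print([round(v, 3) for v in binned_pdfmod(xs, pdf, 2, placeholder_modify)])
    # 0.75 for x < 0.5 and 1.25 for x >= 0.5
```

Every x point inherits the flat Pdf of its choice. That is what gives the modified curve its rectangular, binned shape.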

This algorithm can also be run in an alternative mode. In that mode, the ratio of each choice's modified probability to its original probability is used to scale the Pdf. The idea was to create a continuously varying Pdfmod resembling the original Pdf. However, because the ratio differs from bin to bin, the curve jumps sharply wherever the bin changes. Since discontinuities are unavoidable either way, we decided to proceed with traditional binned distributions (which look like rectangles).
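For comparison, here is a hypothetical sketch of the alternative ratio mode. The function name and inputs are assumptions: p_choices and pmod_choices stand for the per-choice probabilities before and after modification. Within each bin the curve keeps its original shape, but the scale factor pmod/p jumps at every bin edge, which produces the discontinuity described above.

```python
from bisect import bisect_right


def ratio_mode_pdfmod(xs: list[float], pdf: list[float], edges: list[float],
                      p_choices: list[float], pmod_choices: list[float]) -> list[float]:
    """Scale the original pdf at each x by pmod/p of the choice containing x.
    Each bin's integral becomes its pmod, since the whole bin is scaled by pmod/p."""
    n = len(p_choices)
    out = []
    for x, f in zip(xs, pdf):
        k = min(bisect_right(edges, x) - 1, n - 1)  # choice index for this x
        out.append(f * pmod_choices[k] / p_choices[k])
    return out


if __name__ == "__main__":
    xs = [i / 10 for i in range(11)]
    pdf = [2 * x for x in xs]                  # linear pdf: p = [0.25, 0.75]
    out = ratio_mode_pdfmod(xs, pdf, [0.0, 0.5, 1.0], [0.25, 0.75], [0.375, 0.625])
    print(round(out[4], 3), round(out[5], 3))  # 1.2 0.833: a drop at x = 0.5
```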

The code for this algorithm and the other functions mentioned here is in this snippet: https://gitlab.syncad.com/peerverity/trust-model-playground/-/snippets/150