
Main article: Aggregation techniques

Introduction

The simple predicate question we’ve been considering can be turned into one requiring a distribution, aka a probability density function. For example, if we ask whether it will be Sunny or Cloudy tomorrow the respondent can answer by saying:

1. Sunny or Cloudy.

2. A percentage chance of each. This is, in a sense, the coarsest distribution possible and is the type of answer we have been considering so far.

3. An actual distribution where we create a continuous variable, 0-1, to represent the degree of Cloudiness. For example, 0 is no clouds and 1 is a densely overcast sky.

Cases 1 and 2 are the ones we’ve been assuming so far and already have the math for. Case 1, as we’ve noted previously, is a specific variant of Case 2 where the probabilities are assumed to be 100% or 0% (e.g. 100% Sunny / 0% Cloudy or vice versa). And although both Cases 1 and 2 are specific cases of Case 3 in the sense that they are “distributions”, we handle them mathematically in a slightly different way than an actual distribution. Specifically, Case 3 has a meaningful x-axis which represents a scale against which a probability density can be plotted. Cases 1 and 2 are fully discrete categories and we simply use the probabilities themselves.

This post is about Case 3. First we will discuss “binned” distributions and then move on to the fully continuous case.

Binned Distributions

This situation is very similar to the one Sapienza wrote about in his paper: https://ceur-ws.org/Vol-1664/w9.pdf.

Let’s represent the Cloudiness example with a number of discrete bins along the x-axis: 0-0.2 is sunny, 0.2-0.4 is mostly sunny, 0.4-0.6 is partly cloudy, 0.6-0.8 is overcast, 0.8-1.0 is thick overcast.

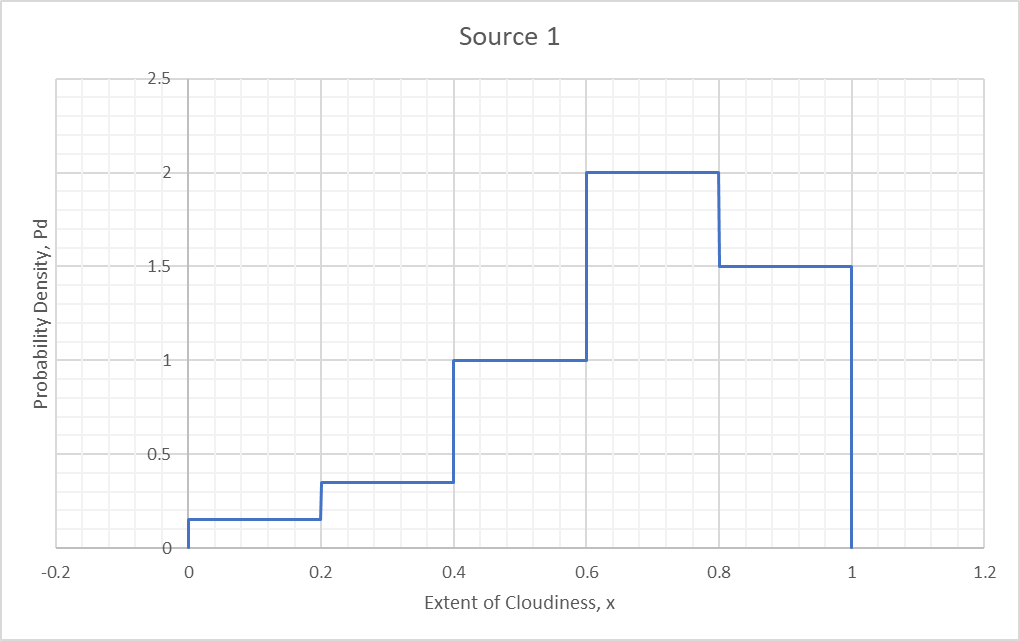

Our first source provides a distribution as follows:

This is a probability density function (PDF), $f(x)$, and the total area under the curve is 1:

$$\int_0^1 f(x)\,dx = 1$$

Here 1 represents the total probability, i.e. that one of the outcomes in the distribution will happen. If we want to know the probability of an event lying between any two points $a$ and $b$ on the x-axis, we write:

$$P(a \le x \le b) = \int_a^b f(x)\,dx$$

For example, the probability of overcast skies (0.6-0.8) is:

$$P(0.6 \le x \le 0.8) = \int_{0.6}^{0.8} f(x)\,dx$$

For simple cases like this one, where $f(x)$ is constant over some interval $[a, b]$, $P(a \le x \le b) = f(x)\,(b - a)$.
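As a minimal sketch of the "density times width" rule for a binned distribution, the snippet below uses hypothetical bin densities (they are not the values from the figure) and checks that the total area is 1. The names `edges`, `density`, `bin_probability`, and `total_area` are illustrative assumptions.

```python
# Hypothetical bin edges and densities, chosen only for illustration.
edges = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
density = [0.5, 0.5, 1.0, 2.0, 1.0]  # constant f(x) within each bin


def bin_probability(edges, density, i):
    """Probability of bin i: constant density times bin width."""
    return density[i] * (edges[i + 1] - edges[i])


def total_area(edges, density):
    """Area under the whole piecewise-constant curve (should be 1)."""
    return sum(bin_probability(edges, density, i) for i in range(len(density)))


if __name__ == "__main__":
    print("total area:", total_area(edges, density))
    print("P(overcast, 0.6-0.8):", bin_probability(edges, density, 3))
```

With these made-up densities the overcast bin has density 2.0 over a width of 0.2, so its probability is about 0.4.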

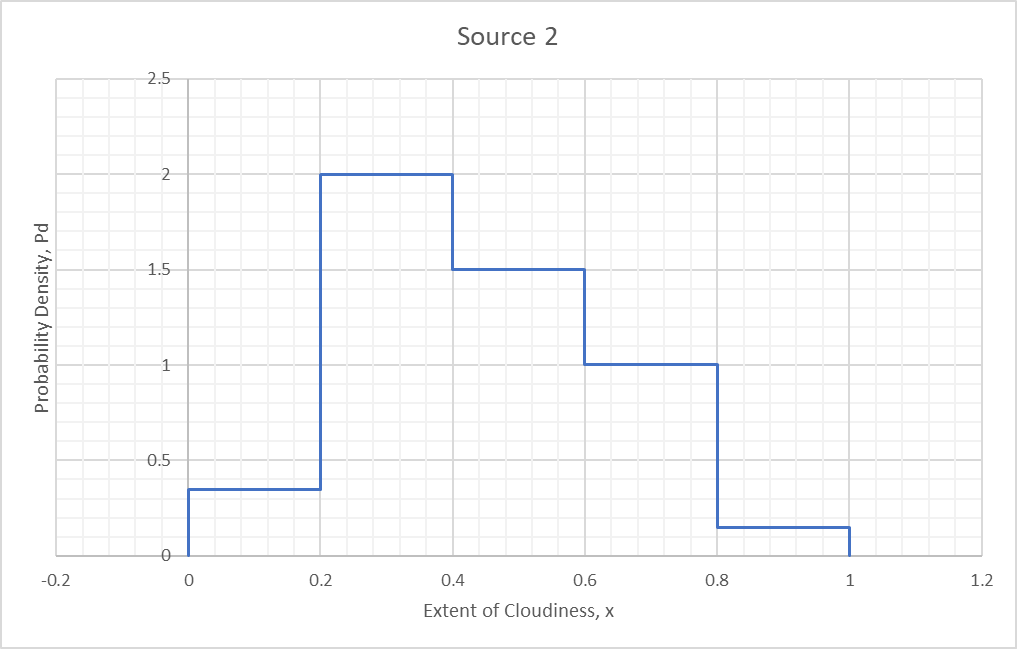

A second source produces the following distribution:

Our goal is to combine these sources via Bayes and the averaging techniques we have seen.

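For the Bayesian combination we can write the combined probability density for an interval $a$ to $b$, where $f_1$ and $f_2$ are the densities the two sources assign to that interval:

$$f_{comb} = \frac{f_1\,f_2}{\int_0^1 f_1(x)\,f_2(x)\,dx}$$

For example, for the interval 0.6 to 0.8, the combined density is: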

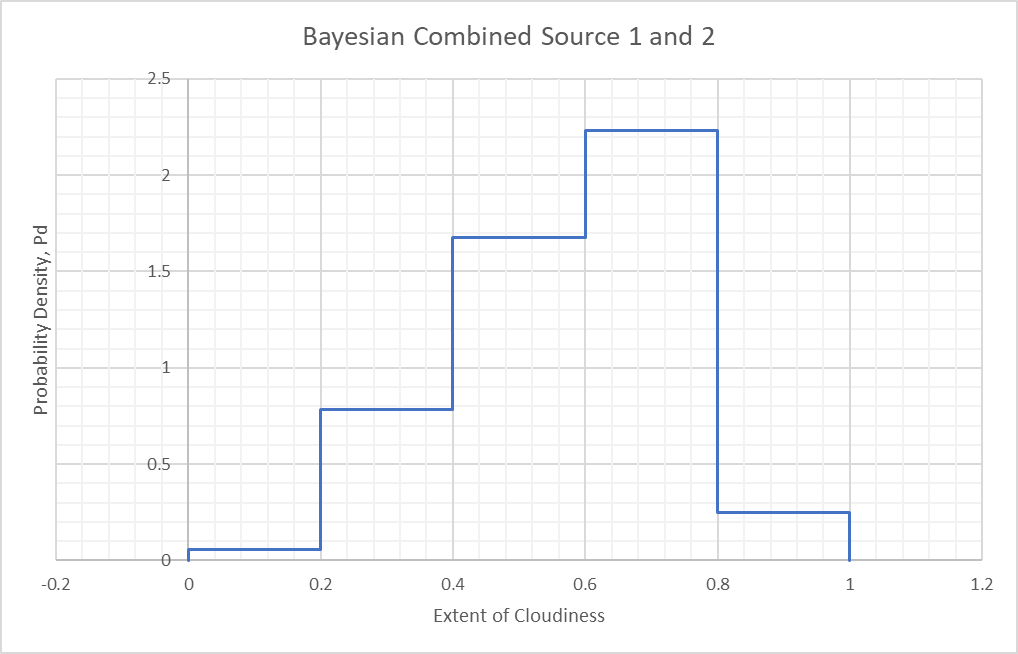

Doing this for each interval and plotting the results leads to the following graph of the combined density:

This graph can then be used as the updated distribution to which new source distributions can be combined in exactly the same manner. In general we see that combining distributions is no different than using the Bayes equation as we have, except that we have more sub-intervals to consider and the denominator is an integral rather than a summation of probability products.
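The Bayesian combination of two binned distributions can be sketched as follows. The source densities are hypothetical, and the function name `bayes_combine_binned` is an assumption made for this example. For piecewise-constant densities the integral in the denominator reduces to a sum of (product × bin width) terms.

```python
def bayes_combine_binned(edges, f1, f2):
    """Combine two binned densities: product in each bin, divided by the
    area under the product curve so the result again integrates to 1."""
    widths = [edges[i + 1] - edges[i] for i in range(len(f1))]
    products = [a * b for a, b in zip(f1, f2)]
    norm = sum(p * w for p, w in zip(products, widths))
    if norm == 0:
        raise ValueError("the two sources never overlap; Bayes is undefined")
    return [p / norm for p in products]


# Hypothetical densities for two sources (each has area 1 on [0, 1]).
edges = [0.0, 0.2, 0.4, 0.6, 0.8, 1.0]
source_1 = [0.5, 0.5, 1.0, 2.0, 1.0]
source_2 = [2.0, 1.5, 1.0, 0.25, 0.25]

combined = bayes_combine_binned(edges, source_1, source_2)
# The combined curve can serve as the prior for a further source, e.g.
# bayes_combine_binned(edges, combined, source_3) for some third source.
```

Because the output has the same shape as the inputs, folding in another source is just another call with `combined` as the first density.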

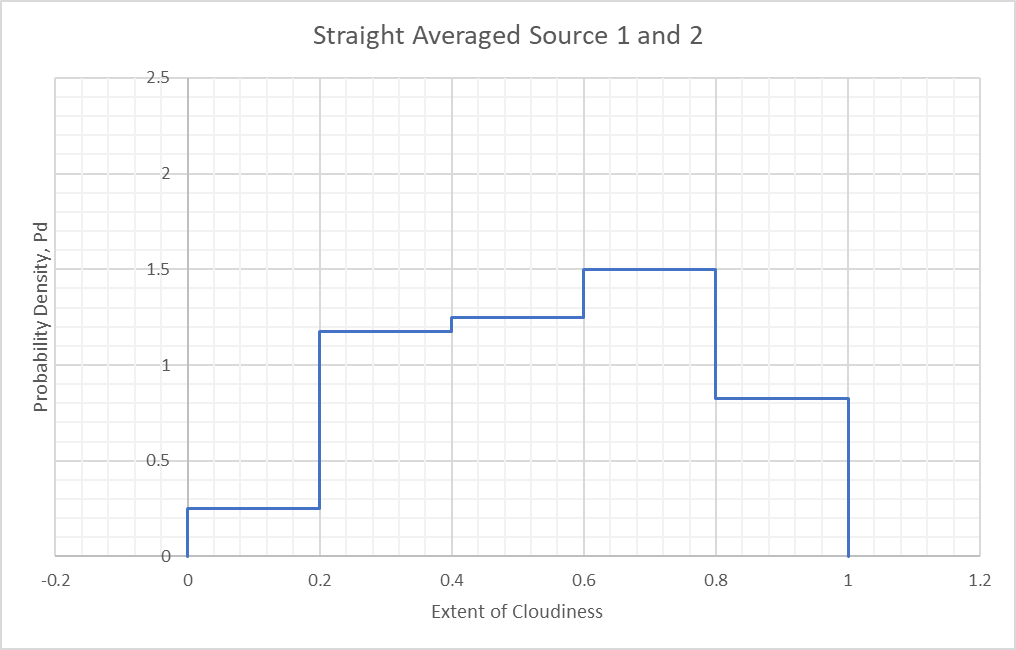

Averaging or trust-weighted averaging works exactly the same way: each sub-interval is taken and averaged to produce a new distribution. A straight average of the overcast case above leads to:

Doing this for each subinterval leads to the following distribution:

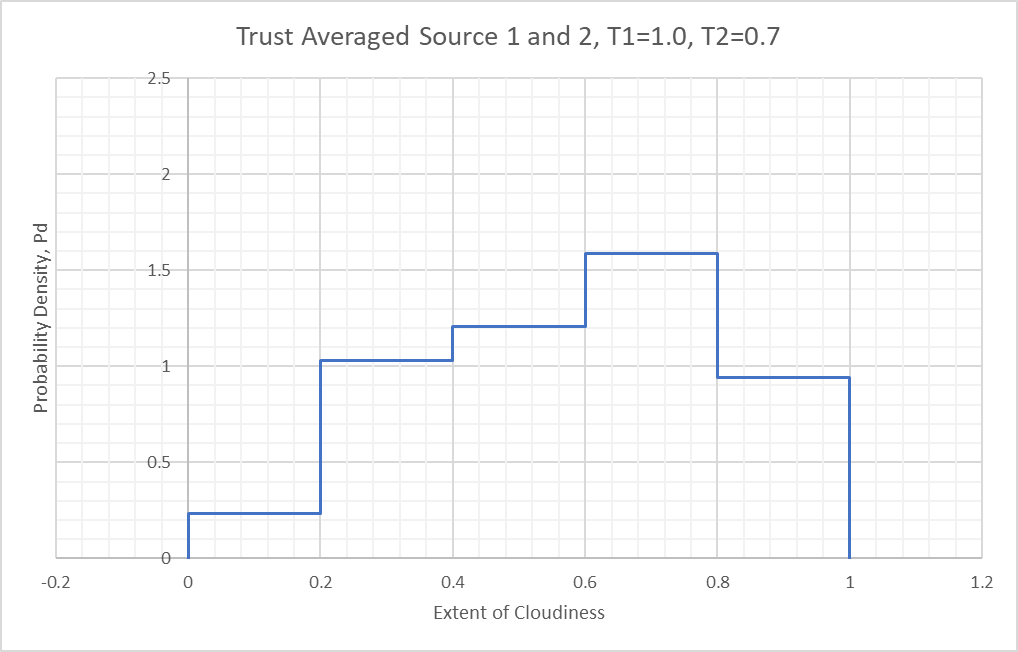

Trust-weighted averaging also works the way you’d expect, with each sub-interval handled by the trust-weighting and averaging scheme. Again, for the 0.6 to 0.8 sub-interval, assuming Trust = 1.0 for Source 1 and Trust = 0.7 for Source 2:

Doing this over each sub-interval and plotting leads to:

This Excel spreadsheet (Sheet 1), binning.xlsx, and snippet reproduce the calculations shown here.
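The two averaging schemes can be sketched bin by bin as below. This is not the linked snippet. It assumes the trust-weighted average is an ordinary weighted mean with the trust values as weights, which may differ from the scheme defined earlier in this series. The densities are hypothetical.

```python
def average_binned(f1, f2):
    """Straight average of two binned densities, bin by bin."""
    return [(a + b) / 2 for a, b in zip(f1, f2)]


def trust_weighted_average_binned(f1, f2, t1, t2):
    """Weighted mean of two binned densities.

    Assumption: trust values act as plain weights, normalised by their sum.
    """
    return [(t1 * a + t2 * b) / (t1 + t2) for a, b in zip(f1, f2)]


# Hypothetical densities on five equal bins covering [0, 1].
source_1 = [0.5, 0.5, 1.0, 2.0, 1.0]
source_2 = [2.0, 1.5, 1.0, 0.25, 0.25]

avg = average_binned(source_1, source_2)
tw_avg = trust_weighted_average_binned(source_1, source_2, t1=1.0, t2=0.7)
```

Under this assumption both results remain valid densities, since a weighted mean of curves with area 1 also has area 1.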

Continuous Distributions

The above situation was a semi-continuous distribution, piecewise if you will. For the case of a fully continuous distribution, where the source provides a function, we can largely approach it the same way, by breaking up the function into discrete sub-intervals and performing numerical integration.

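Let’s suppose Source 1 answers the question with a Weibull distribution:

$$f(x) = \frac{k}{\lambda}\left(\frac{x}{\lambda}\right)^{k-1} e^{-(x/\lambda)^{k}}, \quad x \ge 0$$

Where:

$k$ (known as a “shape” parameter)

$\lambda$ (known as a “scale” parameter)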

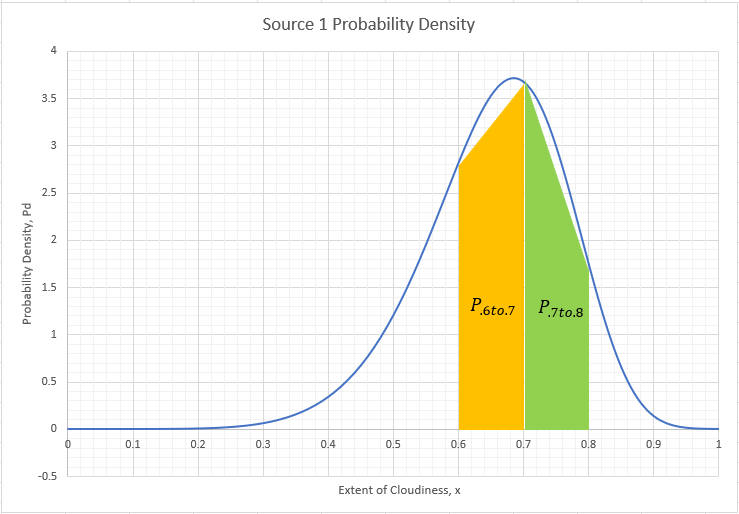

However, it is not important what the functional form of the distribution is (this is just an example) as long as the area under the curve is 1. If we plot this distribution we obtain:

As we saw above, the probability of the cloudiness extent being in any given sub-interval is the area under the curve of that sub-interval. We obtain the area by integrating numerically. If we break up the function into 10 equally spaced sections we can find the area for the sub-interval x=0.6 to 0.8 by adding the areas for the sections 0.6 to 0.7 (yellow region) and 0.7 to 0.8 (green region):

This area is not very accurate because we only used 10 sections. But if we break the function up into more sections (i.e. make the section width small enough) we can obtain an accurate approximation of the area. We can then sum these small areas to find the area for any reasonably sized sub-interval.

Note that we are using trapezoidal areas here rather than the simple rectangular areas used in the binned example. This is only for the purpose of numerical accuracy. We could have used rectangular areas but then we would require more sections (a finer grid), and hence more computation, to achieve good accuracy.
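A minimal sketch of the trapezoidal integration described above is given here. The Weibull parameters `K1` and `LAM1` are hypothetical stand-ins, not the values used in the article, and `weibull_pdf` and `trapezoid` are names introduced for this example.

```python
import math


def weibull_pdf(x, k, lam):
    """Weibull density with shape k >= 1 and scale lam > 0 (zero for x < 0)."""
    if x < 0:
        return 0.0
    z = x / lam
    return (k / lam) * z ** (k - 1) * math.exp(-(z ** k))


def trapezoid(f, a, b, n):
    """Area under f between a and b using n equal trapezoidal sections."""
    dx = (b - a) / n
    return sum(0.5 * (f(a + i * dx) + f(a + (i + 1) * dx)) * dx for i in range(n))


# Hypothetical parameters; the article's actual values are not reproduced here.
K1, LAM1 = 8.0, 0.7
source_1 = lambda x: weibull_pdf(x, K1, LAM1)

coarse = trapezoid(source_1, 0.6, 0.8, 2)    # two sections of width 0.1
fine = trapezoid(source_1, 0.6, 0.8, 200)    # much finer grid
```

The Weibull has a closed-form cumulative distribution, so the exact area `exp(-(0.6/LAM1)**K1) - exp(-(0.8/LAM1)**K1)` is a convenient check on both estimates.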

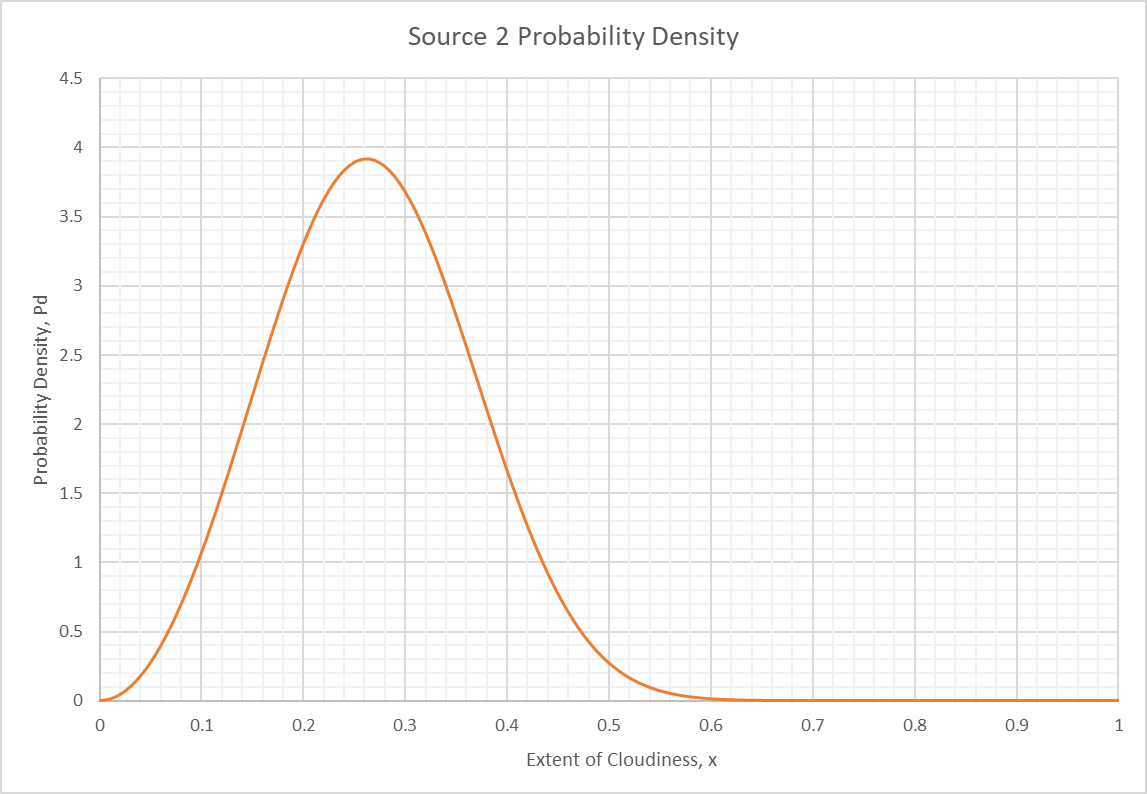

Let’s introduce a second source who answers the question with a second Weibull distribution, shaped and scaled a little differently (that is, with its own “shape” and “scale” parameters).

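Source 2 believes the weather tomorrow will be sunnier than Source 1 does. To combine these distributions via Bayes, we write:

$$f_{comb}(x) = \frac{f_1(x)\,f_2(x)}{\int_{x_{min}}^{x_{max}} f_1(x)\,f_2(x)\,dx}$$

The numerator is just the product of $f(x)$ for each source at the desired $x$. The denominator is the area under the curve of a function comprising the product of $f_1(x)$ and $f_2(x)$. This area is found by integrating numerically using the same technique as above (with trapezoidal sections):

$$\int_{x_{min}}^{x_{max}} g(x)\,dx \approx \sum_{i=1}^{N} \frac{g(x_{i-1}) + g(x_i)}{2}\,\Delta x, \qquad g(x) = f_1(x)\,f_2(x), \quad x_i = x_{min} + i\,\Delta x, \quad \Delta x = \frac{x_{max} - x_{min}}{N}$$

where

$N$ is the number of sections (=10 for example)

$x_{max}$ is the maximum value of $x$ (=1 in this case)

$x_{min}$ is the minimum value of $x$ (=0 in this case)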
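The continuous Bayesian combination can be sketched on a grid as follows, using the product of the two densities in the numerator and a trapezoidal estimate of the area under that product in the denominator. Both sets of Weibull parameters are hypothetical, and `bayes_combine` is a name assumed for this example.

```python
import math


def weibull_pdf(x, k, lam):
    """Weibull density with shape k >= 1 and scale lam > 0 (zero for x < 0)."""
    if x < 0:
        return 0.0
    z = x / lam
    return (k / lam) * z ** (k - 1) * math.exp(-(z ** k))


def bayes_combine(f1, f2, x_min, x_max, n):
    """Return grid points and the normalised product f1*f2 at those points.

    The normalising constant is the trapezoidal area under f1*f2 over
    n equal sections between x_min and x_max.
    """
    dx = (x_max - x_min) / n
    xs = [x_min + i * dx for i in range(n + 1)]
    prod = [f1(x) * f2(x) for x in xs]
    area = sum(0.5 * (prod[i] + prod[i + 1]) * dx for i in range(n))
    return xs, [p / area for p in prod]


# Hypothetical sources: Source 2 is "sunnier" (mass closer to x = 0).
source_1 = lambda x: weibull_pdf(x, 8.0, 0.7)
source_2 = lambda x: weibull_pdf(x, 3.0, 0.35)

xs, combined = bayes_combine(source_1, source_2, 0.0, 1.0, 10)
```

By construction, the trapezoidal area under `combined` on the same grid is 1, whatever value of `n` is used.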

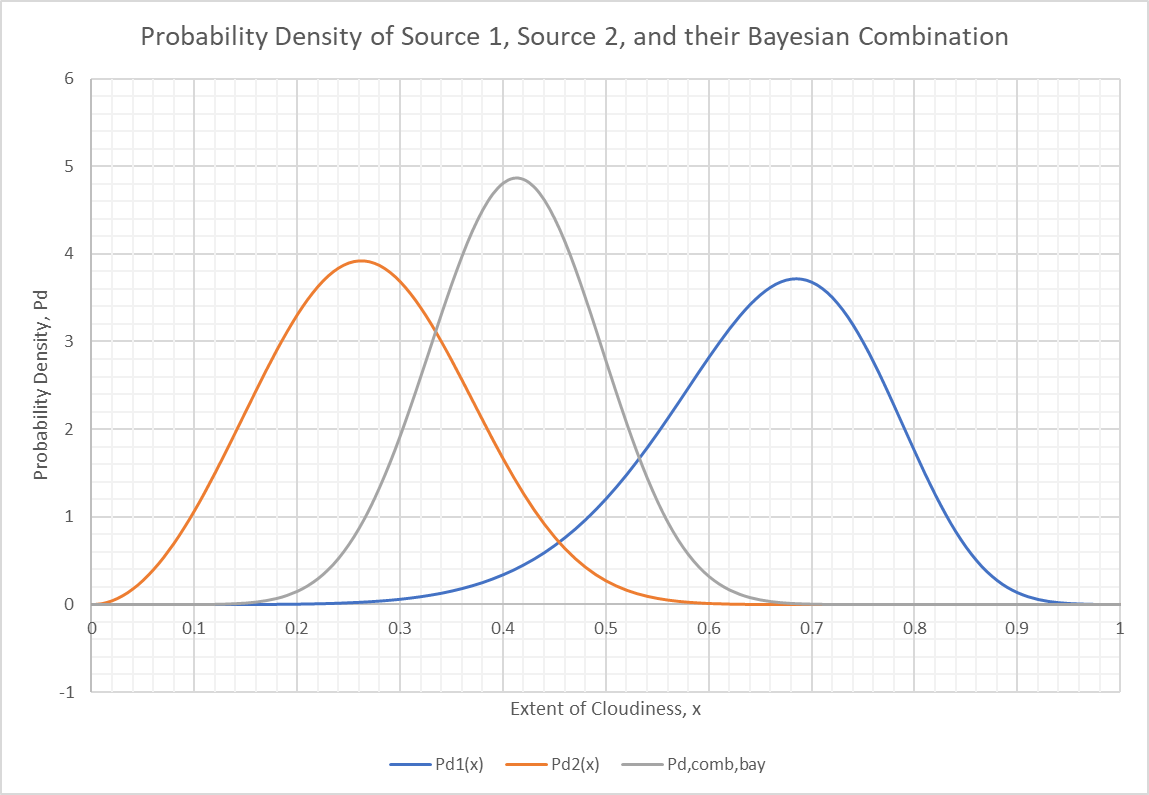

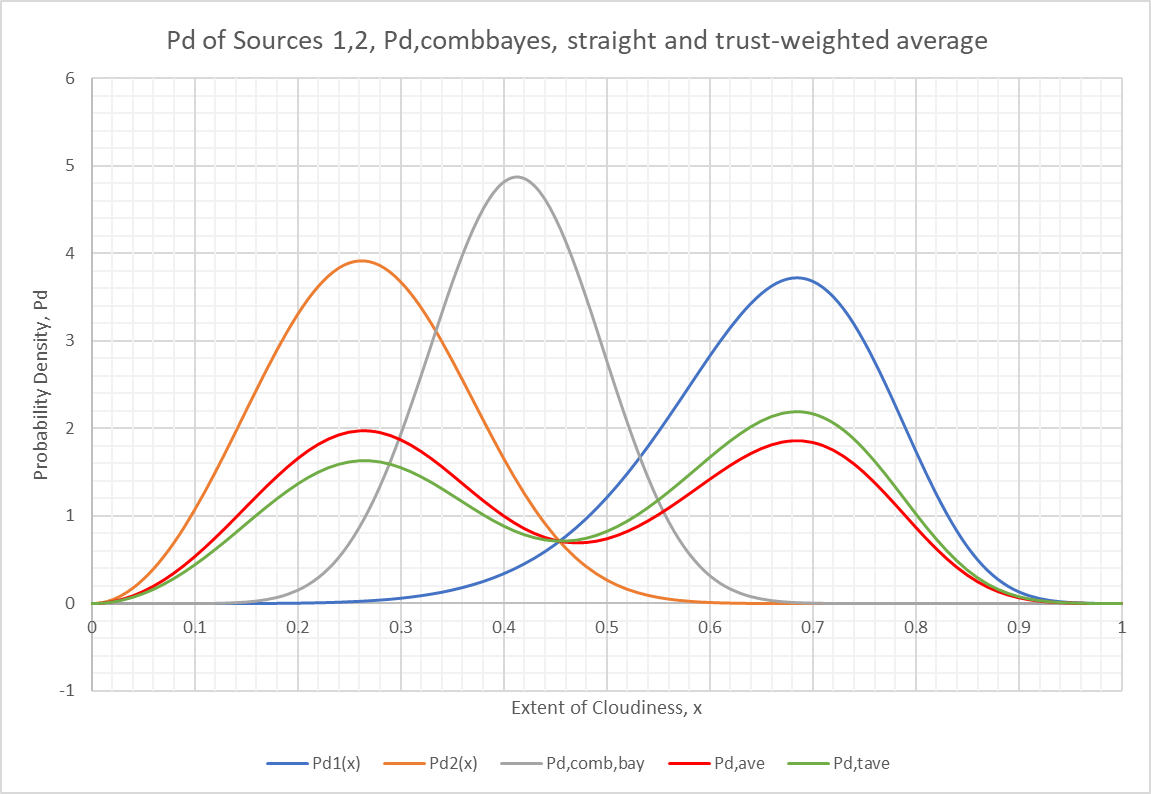

If we do this for all sections we can plot the results along with the densities for the first two sources for comparison.

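We can calculate the straight average at any given $x$, where $f_1$ and $f_2$ are the two sources' densities:

$$f_{avg}(x) = \frac{f_1(x) + f_2(x)}{2}$$

For the trust-weighted average, with a trust value assigned to each source and evaluated at the same $x$, we have: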

If we do these averages for several points, we can add the resulting plot to the plot above:

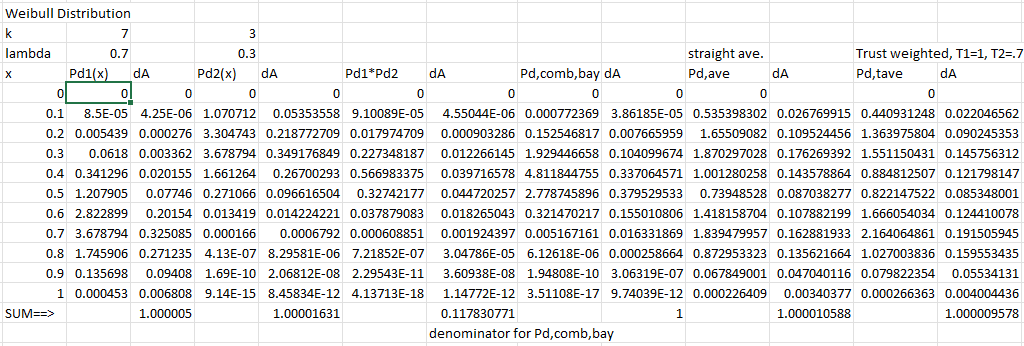

A table of the values used for the 10-section case is shown here:

The curves above were actually plotted on a finer grid, but the values at the points shown in this table correspond quite closely. An Excel spreadsheet to do the calculations is here (Sheet 2): binning.xlsx

Now, to find the probability of any sub-interval within any of these combined curves, we numerically integrate as shown above for Source 1. This is equivalent to adding up each section’s area, the dA values shown in the table & spreadsheet above.
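Summing section areas over a sub-interval can be sketched as below. The helper names `section_areas` and `interval_probability` are assumptions, and the sketch requires the sub-interval endpoints to coincide with grid points (a small tolerance absorbs floating-point error). A uniform density is used so the result is easy to check by hand.

```python
def section_areas(xs, ys):
    """Trapezoidal area dA of each section between consecutive grid points."""
    return [0.5 * (ys[i] + ys[i + 1]) * (xs[i + 1] - xs[i])
            for i in range(len(xs) - 1)]


def interval_probability(xs, ys, a, b, tol=1e-9):
    """Sum the dA values of all sections lying inside [a, b].

    Assumes a and b fall on grid points.
    """
    d_a = section_areas(xs, ys)
    return sum(area for i, area in enumerate(d_a)
               if xs[i] >= a - tol and xs[i + 1] <= b + tol)


# Uniform density on [0, 1] with 10 sections: P(0.6 <= x <= 0.8) is 0.2.
xs = [i / 10 for i in range(11)]
ys = [1.0] * 11
p_overcast = interval_probability(xs, ys, 0.6, 0.8)
```

The same two functions apply unchanged to any of the combined curves, as long as they are tabulated on the grid `xs`.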

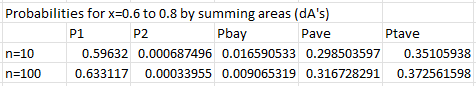

For the case of Source 1 between $x = 0.6$ and $x = 0.8$ we have:

This, you will notice, is the same value as that produced above when we performed the numerical integration “from scratch”.

For Source 2, we have:

For the Bayesian combination we have:

and so on. The numbers above refer to the 10-section case. We can perform these calculations for all the curves on both the coarser and the finer grid (values in the Excel spreadsheet) and produce the following table:

We can see that Source 1 believes, to a large extent, in overcast skies tomorrow. Source 2, however, doesn’t believe that at all. The Bayesian combination, as it tends to do, pulls in favor of certainty so produces a low probability of overcast skies. The average produces something in between and the trust weighted average favors Source 1 a little because it discounts Source 2 somewhat (Trust = 0.7).

Also notable is the fact that we obtain a small but significant change in the values as we move from the coarser grid to the finer one. It isn’t clear from this that we have achieved “grid independence” but we are very close. Grid independence means that refining the calculation with more steps no longer changes the results appreciably, indicating that the number of steps we have is sufficient. This snippet, which reproduces the calculations above, can be run on a still finer grid and you will see that the answers don’t change much. We should be careful to refine the grid only when necessary because it increases the cost of calculation enormously.
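One way to test for grid independence is to double the number of sections until the integral stops changing by more than a chosen tolerance. The sketch below is not the snippet referenced above. The function `refine_until_stable`, its tolerance, and the Weibull parameters are all assumptions made for illustration.

```python
import math


def weibull_pdf(x, k, lam):
    """Weibull density with shape k >= 1 and scale lam > 0 (zero for x < 0)."""
    if x < 0:
        return 0.0
    z = x / lam
    return (k / lam) * z ** (k - 1) * math.exp(-(z ** k))


def trapezoid(f, a, b, n):
    """Area under f between a and b using n equal trapezoidal sections."""
    dx = (b - a) / n
    return sum(0.5 * (f(a + i * dx) + f(a + (i + 1) * dx)) * dx for i in range(n))


def refine_until_stable(f, a, b, n_start=10, rel_tol=1e-4, max_n=100_000):
    """Double n until successive estimates agree to within rel_tol."""
    n = n_start
    prev = trapezoid(f, a, b, n)
    while n * 2 <= max_n:
        n *= 2
        cur = trapezoid(f, a, b, n)
        if abs(cur - prev) <= rel_tol * abs(cur):
            return n, cur
        prev = cur
    raise RuntimeError("no grid-independent result within max_n sections")


# Hypothetical Source 1 parameters (shape 8, scale 0.7).
source_1 = lambda x: weibull_pdf(x, 8.0, 0.7)
n_used, p_overcast = refine_until_stable(source_1, 0.6, 0.8)
```

Stopping at the first grid that meets the tolerance keeps the cost down, which matters because each doubling also doubles the number of function evaluations.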

Summary

The key point in all this analysis is that the math we’ve developed so far applies to probability densities in exactly the same way as it does for simple probabilities. There’s just more of it because you have to break up the distribution into small increments along the x axis and do the math on each increment. But the math is the same.

We’ve covered three possibilities so far for how respondents can answer questions: 1) They can assign a single probability to their answer, 2) They can provide a binned distribution, and 3) they can provide a truly continuous distribution. They can also just answer the question without giving a probability but this is a special case of 1 where we assume a probability of 100%.

It is likely that 1 and 2 will be the preferred ways to answer. The continuous distribution, although important, seems like the province of experts who perform a study or simulation to come up with their function.

Also, keep in mind that continuous distributions are only useful in cases where there is a continuous variable. The binned distribution can be used even when there isn’t a continuous variable because, for truly discrete categories, you can simply break up the x-axis into equal length sections and use the same math. This is what Sapienza did, for instance. You can also just dispense with the x-axis and use the probabilities (instead of probability densities), as we’ve been doing in the work before this post.

This brings up the question of why Sapienza chose to use a binned distribution for what was, at first glance, a discrete variable problem. One answer is that maybe he meant for his example to represent a continuous variation from good to bad weather. For simplicity, he then broke up the x-axis into equal length bins. It is certainly easier to think about continuous variations as a collection of discrete groupings. But the more likely explanation is that he did it because it is a more generalized method that works for all cases, discrete and continuous.