Modification to the Sapienza probability adjustment for trust to include random lying, bias, and biased lying

Revision as of 17:55, 26 September 2024

Main article: Trust

In the last iteration on this subject, we modified the probability adjustment for trust to include lying and bias. We noted that the lying was random in nature; that is, the lie would be distributed evenly among all options that were not the truth. Here we modify this assumption by also allowing lying that is biased toward a particular outcome. In other words, if the source lies, it tries to favor a particular outcome, as long as that outcome is not the truth. If that outcome is the truth, the source falls back to random lying. Keep in mind that this is in addition to the already established random lying, which we keep in the model for generality.
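The behavior described above can be made concrete with a small Monte Carlo sketch of a single source. It assumes that the trust $T$, the random-answer fraction $R$, the random-lying fraction $L$, the biased-lying fractions (`L_bias`, one per option) and the biases (`B`, one per option) are fractions that sum to 1. The function names `report` and `simulate` and the dict-based encoding of the parameters are illustrative assumptions, not the code in the linked snippet.

```python
import random


def report(truth, options, T, R, L, L_bias, B, rng):
    """Draw one report from the source when `truth` is the actual outcome."""
    others = [o for o in options if o != truth]
    # Each behavior is a (kind, target) pair weighted by its fraction.
    behaviors = [("truth", None), ("random", None), ("lie", None)]
    weights = [T, R, L]
    behaviors += [("biased_lie", o) for o in options]
    weights += [L_bias[o] for o in options]
    behaviors += [("bias", o) for o in options]
    weights += [B[o] for o in options]
    kind, target = rng.choices(behaviors, weights=weights)[0]

    if kind == "truth":
        return truth
    if kind == "random":        # any option, including the truth
        return rng.choice(options)
    if kind == "lie":           # evenly among the false options
        return rng.choice(others)
    if kind == "bias":          # the favored option, no matter what
        return target
    # Biased lie: say the favored option if it is false,
    # otherwise fall back to a random lie.
    return target if target != truth else rng.choice(others)


def simulate(P, T, R, L, L_bias, B, samples=100_000, seed=1):
    """Estimate the frequency of each reported outcome by sampling."""
    rng = random.Random(seed)
    options = list(P)
    counts = dict.fromkeys(options, 0)
    for _ in range(samples):
        truth = rng.choices(options, weights=[P[o] for o in options])[0]
        counts[report(truth, options, T, R, L, L_bias, B, rng)] += 1
    return {o: c / samples for o, c in counts.items()}


P = {"Red": 0.60, "Blue": 0.30, "Green": 0.10}
freq = simulate(P, T=0.70, R=0.08, L=0.05,
                L_bias={"Red": 0.02, "Blue": 0.03, "Green": 0.05},
                B={"Red": 0.01, "Blue": 0.03, "Green": 0.03})
print(freq)
```

With the parameters of the numerical example, the sampled Green frequency should land close to the closed-form value of 20.467%. Sampling is only a cross-check here, since the equation gives the exact answer.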

The Equation

The equation presented here is a little easier to follow because it accounts explicitly for all possibilities: the bias can be toward any of the options and so can the lies.

Let’s begin by defining some terms, many of which are familiar from the last iteration. Again we will assume a choice between three outcomes: Red, Blue and Green.

$n$ = Number of choices (e.g., 3 in this case)

$N$ = Number of samples (this ultimately cancels out, so it is only useful in the derivation)

$P_R$ = Probability of Red

$P_B$ = Probability of Blue

$P_G$ = Probability of Green

$P_R + P_B + P_G = 1$ (just to be clear)

$T$ = Trust, i.e., the percent of time the reported outcome is the truth

$R$ = Random portion of $1 - T$ (same as before)

$L$ = Lying-at-random portion of $1 - T$ (same as before)

$L_R$ = Lying with a bias to Red (the source lies this % of the time by saying Red, as long as Red is a lie; if Red is the truth, this portion becomes random lying)

$L_B$ = Lying with a bias to Blue

$L_G$ = Lying with a bias to Green

$B_R$ = Bias to Red (same as before; the source answers Red this % of the time no matter what)

$B_B$ = Bias to Blue (same as before)

$B_G$ = Bias to Green (same as before)

$T + R + L + L_R + L_B + L_G + B_R + B_B + B_G = 1$ (just to be clear)

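The equation is as follows for any particular option, e.g., Green:

$$P'_G = T P_G + \frac{R}{n} + \frac{L}{n-1}(1 - P_G) + B_G + L_G(1 - P_G) + \frac{P_R L_R + P_B L_B}{n-1}$$

In general, for any particular choice $i$ among all the other choices $j$, we can write:

$$P'_i = T P_i + \frac{R}{n} + \frac{L}{n-1}(1 - P_i) + B_i + L_i(1 - P_i) + \frac{1}{n-1}\sum_{j \ne i} P_j L_j$$

To be clear, the subscript $j$ represents any choice except $i$, the one for which we are computing $P'_i$.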
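A direct transcription of the general equation for $P'_i$ into Python might look like the sketch below. The dict-based parameter layout and the name `adjusted_probability` are assumptions made for illustration; `L_bias[j]` stands for the biased-lying fraction $L_j$ and `B[j]` for the bias $B_j$.

```python
def adjusted_probability(i, P, T, R, L, L_bias, B):
    """Adjusted probability P'_i for option i.

    P, L_bias and B are dicts keyed by option; T, R and L are scalars.
    """
    n = len(P)
    # Lies biased toward some other option j that turn into random lies
    # when j is actually the truth; option i gets 1/(n-1) of them.
    fallback = sum(P[j] * L_bias[j] for j in P if j != i) / (n - 1)
    return (T * P[i]                       # honest reports
            + R / n                        # random answers
            + L * (1 - P[i]) / (n - 1)     # random lies
            + B[i]                         # bias toward i
            + L_bias[i] * (1 - P[i])       # lies biased toward i
            + fallback)


P = {"Red": 0.60, "Blue": 0.30, "Green": 0.10}
params = dict(T=0.70, R=0.08, L=0.05,
              L_bias={"Red": 0.02, "Blue": 0.03, "Green": 0.05},
              B={"Red": 0.01, "Blue": 0.03, "Green": 0.03})
adjusted = {i: adjusted_probability(i, P, **params) for i in P}
print(adjusted)
```

The three adjusted values sum to 1, as they must: $T$, $R$, $L$, the $L_j$ and the $B_j$ themselves sum to 1, and every one of those fractions ends up as some reported option.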

Derivation

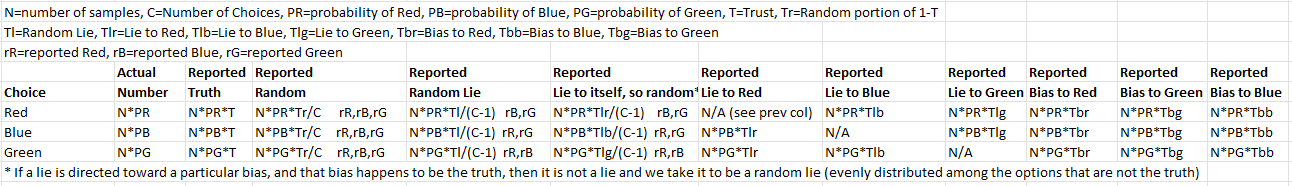

This derivation follows the last one we did in that we begin with a sample size of $N$ and a number of choices $n$ representing colors as above: Red, Blue, and Green. Instead of using a numerical example, we perform the derivation from the outset using the symbolic variable names. The following table can be created to represent the breakdown of actual and reported choices:

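Take, for instance, all the cases where Green is reported. $P'_G$ is then the sum of all the reported Green cases divided by the total number of samples:

$$P'_G = \frac{1}{N}\left[N P_G\left(T + \frac{R}{n} + B_G\right) + N P_R\left(\frac{R}{n} + \frac{L}{n-1} + L_G + \frac{L_R}{n-1} + B_G\right) + N P_B\left(\frac{R}{n} + \frac{L}{n-1} + L_G + \frac{L_B}{n-1} + B_G\right)\right]$$

The $N$ cancels and we can collect terms to obtain:

$$P'_G = T P_G + \left(\frac{R}{n} + B_G\right)(P_R + P_B + P_G) + \left(\frac{L}{n-1} + L_G\right)(P_R + P_B) + \frac{P_R L_R + P_B L_B}{n-1}$$

and so, since $P_R + P_B + P_G = 1$,

$$P'_G = T P_G + \frac{R}{n} + B_G + \left(\frac{L}{n-1} + L_G\right)(P_R + P_B) + \frac{P_R L_R + P_B L_B}{n-1}$$

Rearranging terms leads to:

$$P'_G = T P_G + \frac{R}{n} + \frac{L}{n-1}(P_R + P_B) + B_G + L_G(P_R + P_B) + \frac{P_R L_R + P_B L_B}{n-1}$$

Again noting that $P_R + P_B = 1 - P_G$, some further rearrangement leads to:

$$P'_G = T P_G + \frac{R}{n} + \frac{L}{n-1}(1 - P_G) + B_G + L_G(1 - P_G) + \frac{P_R L_R + P_B L_B}{n-1}$$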

This is the same as the equation presented above. The first four terms are the same as in the equation from the last iteration, which had only random lying and bias. The next term represents lying biased toward Green. The last term represents lying biased toward Red and Blue in the cases where Red or Blue is actually the truth: a biased lie toward Blue cannot be told when Blue is the truth, so it falls back to a random lie, and Green receives its $1/(n-1)$ share of those random lies (likewise for Red).
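The derivation can be cross-checked by building the breakdown of actual versus reported choices numerically: for each actual option, enumerate every behavior of the source, accumulate the probability of each reported option, scale by $N$ times the probability of the actual option, and then sum the Green column and divide by $N$. This is a sketch under the same parameter assumptions as the equation; the names `report_distribution` and `breakdown_table` are hypothetical.

```python
def report_distribution(truth, options, T, R, L, L_bias, B):
    """Probability of each reported option when `truth` is the actual one."""
    n = len(options)
    others = [o for o in options if o != truth]
    dist = dict.fromkeys(options, 0.0)
    dist[truth] += T                       # honest report
    for o in options:
        dist[o] += R / n + B[o]            # random answer and fixed bias
    for o in others:
        dist[o] += L / (n - 1)             # random lie
    for target in options:
        if target != truth:
            dist[target] += L_bias[target]           # biased lie succeeds
        else:
            for o in others:                         # favored option is true,
                dist[o] += L_bias[target] / (n - 1)  # so lie at random instead
    return dist


def breakdown_table(P, N, T, R, L, L_bias, B):
    """Expected counts: table[actual][reported] for N samples."""
    options = list(P)
    return {
        actual: {rep: N * P[actual] * p
                 for rep, p in report_distribution(
                     actual, options, T, R, L, L_bias, B).items()}
        for actual in options
    }


P = {"Red": 0.60, "Blue": 0.30, "Green": 0.10}
N = 120
table = breakdown_table(P, N, T=0.70, R=0.08, L=0.05,
                        L_bias={"Red": 0.02, "Blue": 0.03, "Green": 0.05},
                        B={"Red": 0.01, "Blue": 0.03, "Green": 0.03})
p_green = sum(row["Green"] for row in table.values()) / N
print(round(p_green, 5))  # 0.20467
```

Each row of `table` sums to $N$ times the probability of its actual option, and the Green column reproduces the closed-form result, which is what the derivation claims.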

Simple Numerical Example

To apply this equation we use a familiar example in keeping with the Red, Blue, and Green choices we’ve been using all along.

$n$ = 3 = Number of choices

$N$ = 120 = Number of samples (this ultimately cancels out, so it is only useful in the derivation)

$P_R$ = 60% = Probability of Red

$P_B$ = 30% = Probability of Blue

$P_G$ = 10% = Probability of Green

$T$ = 70% = Trust, i.e., the percent of time the reported outcome is the truth

$R$ = 8% = Random portion of $1 - T$

$L$ = 5% = Lying-at-random portion of $1 - T$

$L_R$ = 2% = Lying with a bias to Red (the source lies this % of the time by saying Red, as long as Red is a lie; if Red is the truth, this portion becomes random lying)

$L_B$ = 3% = Lying with a bias to Blue

$L_G$ = 5% = Lying with a bias to Green

$B_R$ = 1% = Bias to Red (the source answers Red this % of the time no matter what)

$B_B$ = 3% = Bias to Blue

$B_G$ = 3% = Bias to Green

$$P'_G = 0.7(0.1) + \frac{0.08}{3} + \frac{0.05}{2}(1 - 0.1) + 0.03 + 0.05(1 - 0.1) + \frac{0.6(0.02) + 0.3(0.03)}{2} = 0.20467$$

Our initial 10% probability for the Green outcome now becomes 20.467% because of Trust, randomness, bias toward the Green outcome, and lies toward the Green outcome.
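To see how much each effect contributes to the jump from 10% to 20.467%, the equation can be split into its individual terms. The term labels, the dict-based parameter layout and the function name `contributions` below are illustrative assumptions.

```python
def contributions(i, P, T, R, L, L_bias, B):
    """Split the adjusted probability P'_i into the terms of the equation."""
    n = len(P)
    return {
        "trust": T * P[i],
        "random answers": R / n,
        "random lies": L * (1 - P[i]) / (n - 1),
        "bias": B[i],
        "biased lies": L_bias[i] * (1 - P[i]),
        # Lies biased toward another option j that fall back to random
        # lies when j is the truth; option i receives 1/(n-1) of them.
        "fallback lies": sum(P[j] * L_bias[j] for j in P if j != i) / (n - 1),
    }


P = {"Red": 0.60, "Blue": 0.30, "Green": 0.10}
parts = contributions("Green", P, T=0.70, R=0.08, L=0.05,
                      L_bias={"Red": 0.02, "Blue": 0.03, "Green": 0.05},
                      B={"Red": 0.01, "Blue": 0.03, "Green": 0.03})
for name, value in parts.items():
    print(f"{name:>15}: {value:.5f}")
print(f"{'total':>15}: {sum(parts.values()):.5f}")
```

For Green the terms come to 0.07 (trust), about 0.02667 (random answers), 0.0225 (random lies), 0.03 (bias), 0.045 (biased lies toward Green) and 0.0105 (biased lies toward Red and Blue that fall back to random lies).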

The following snippet reproduces this, or any similar, calculation:

https://gitlab.syncad.com/peerverity/trust-model-playground/-/snippets/139

The problem of user input

The equation above increases the amount of user input considerably. The untrustworthy part of Trust could previously be calculated as $1 - T$, thus requiring no user input. Now the 3-choice problem above requires 8 new pieces of information from the user beyond $T$ and the probability array $P$.

In practice there should be ways to reduce this. The user could first be asked whether there are any biases and what they are. It is likely that the bias will be toward only a few of the choices, so the remaining ones will be zero. The next question could be whether the lying always follows the bias. If it does, no separate lying input is needed: the biased lying is simply set equal to the bias. The next question could be how much random lying takes place, with the notion that many users will set this to zero since biased lying is more likely. Once this is done, the remaining quantity, $R$, can be calculated as follows:

$$R = 1 - T - L - (L_R + L_B + L_G) - (B_R + B_B + B_G)$$
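The input-reduction procedure above amounts to computing $R$ from the other answers. Here is a minimal sketch, assuming the same dict-per-option layout used for the biases and biased lying; `remaining_random` is a hypothetical name, and treating an omitted `L_bias` as "lying always follows the bias" is one possible encoding of that question, not something prescribed here.

```python
def remaining_random(T, L, B, L_bias=None):
    """Return R = 1 - T - L - sum of biased lying - sum of biases.

    B and L_bias map each option to a fraction. If L_bias is None we
    assume the lying always follows the bias, so L_bias equals B.
    """
    if L_bias is None:
        L_bias = dict(B)
    R = 1 - T - L - sum(L_bias.values()) - sum(B.values())
    if R < -1e-12:  # small tolerance for floating-point round-off
        raise ValueError(f"inputs exceed 1 - T by {-R:.4f}")
    return max(R, 0.0)


# Numerical example: R comes out as 8%.
R = remaining_random(T=0.70, L=0.05,
                     B={"Red": 0.01, "Blue": 0.03, "Green": 0.03},
                     L_bias={"Red": 0.02, "Blue": 0.03, "Green": 0.05})
print(round(R, 4))  # 0.08

# Hypothetical input: one bias toward Blue, lying follows the bias,
# and no random lying.
R2 = remaining_random(T=0.80, L=0.0, B={"Red": 0.0, "Blue": 0.05, "Green": 0.0})
print(round(R2, 4))  # 0.1
```

Raising an error when the inputs add up to more than $1 - T$ gives the user immediate feedback, instead of silently producing a negative random portion.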